Everything posted by TheNoobPolice

-

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

The reason for your observations is that the Windows Enhance Pointer Precision (EPP) algorithm works on distance, not speed. The original documentation for the function (which was first designed for Windows XP) is here, and there is a further accurate breakdown of the small changes applied for Windows 7 here. It has not been changed since. You'll see there is no mention of dividing by the time between each actual poll (i.e. to find input speed) with this algorithm, because it does not happen. There is nothing inherently wrong with this approach - in fact, with a mouse that has significantly variable polling stability (as even many "gaming" mice can often have), it will produce more stable gain through the transfer function and is undoubtedly the best approach for an office mouse use case. It should then be obvious though why 8000hz doesn't accelerate as much as 1000hz - if someone is travelling in a car at a steady 10 km/h and only reports how far they have travelled after 1 hour, then the reported distance is 10 km (obviously). If the report is every millisecond, however, it would be just 2.8 millimetres every time. If one samples any movement more times a second but doesn't divide by the time between the samples, then the distance in each sample is smaller. If we base the input to somefunction() only on distance per report while we move at speed, then of course a higher report rate has a smaller input to somefunction(). The same thing applies with EPP.
Since the sensitivity scale factor is derived from distance only, if one were at 8000hz polling rate and 400 DPI, one would never experience any acceleration at all unless moving the mouse at 20 inches per second or faster, as that is the required hand speed at that DPI & polling rate to get a single input with at least "2" counts in it, which is what triggers a higher sensitivity on the acceleration curve (whereas if the user had only 500hz and a much higher DPI like 3200, then the sensitivity would begin accelerating at just 0.16 inches per second). So this is the only reason why EPP scales differently as you change polling rate, and it's intentional by design. It has nothing to do with worsening precision of the desktop cursor and it is not a poorly designed function technically. The only issues with it are that it is not adequately configurable for power users via the interface, and that there is no visualisation for the end user of how the cursor sensitivity scales without doing some math (which, while not rocket science, is not the garden variety either). -
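To put numbers on the EPP post above, here is a minimal sketch of the distance-per-report arithmetic. It does not model the EPP curve itself; the helper names are made up for illustration, and it reads the 20 and 0.16 inches per second figures as the hand speed at which reports average one count each, above which some reports start to carry two counts.

```python
def counts_per_report(dpi, polling_hz, speed_ips):
    """Average counts carried by each report at a steady hand speed (inches/second)."""
    return dpi * speed_ips / polling_hz


def speed_for_counts(dpi, polling_hz, counts=1.0):
    """Hand speed (inches/second) at which each report averages `counts` counts."""
    return counts * polling_hz / dpi


# Car analogy: 10 km/h reported once per millisecond, in millimetres per report.
mm_per_ms = 10 * 1_000_000 / 3_600_000

print(round(mm_per_ms, 1))               # 2.8
print(speed_for_counts(400, 8000))       # 20.0
print(speed_for_counts(3200, 500))       # 0.15625
print(counts_per_report(400, 1000, 20))  # 8.0
print(counts_per_report(400, 8000, 20))  # 1.0
```

The last two lines are the whole point: the same 20 inches per second hand movement feeds 8 counts per report into the curve at 1000hz but only 1 count per report at 8000hz.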

Changing monitor size always changes your perceived sensitivity. You can compensate in one way, but it will change it in another. Changing monitor distance to compensate for the new size will change your navigation / 360 distance. It is usually best just to adapt to the new size over a little while. You can also compensate by increasing the FOV, but this is not always possible depending on the game's options. Or you can create a custom 24" unscaled resolution of 2275 x 1280 with black bars and play at 24" in games where you are concerned your aim is different. You'll still benefit from the increased pixel density with no change in perceived sensitivity. You can then just use the 27" resolution on the desktop or in other games where aiming is not critical. Or you can return the monitor and go back to 24". Those are your only options.
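The 2275 x 1280 figure can be reproduced with a short sketch. It assumes the 27" panel is 2560 x 1440 native (the post does not state this, but the numbers imply it) and that both sizes share the same aspect ratio; the function name is hypothetical.

```python
def unscaled_resolution(native_w, native_h, native_diag_in, target_diag_in):
    """Pixel area that physically matches a smaller panel of the same aspect ratio.

    Integer floor division is used so the result is a whole number of pixels.
    """
    width = native_w * target_diag_in // native_diag_in
    height = native_h * target_diag_in // native_diag_in
    return width, height


print(unscaled_resolution(2560, 1440, 27, 24))  # (2275, 1280)
```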

-

At least for those data points, you can use these values with MDD to match them to about 6 decimal places or so

-

Care to share the math for us nerds to peruse? Or are you like that kid at school with no siblings who never let the other kids play with his stuff?

-

It's a 16:9 display, so 178% is the vertical and 100% is the horizontal. Another way to look at this: if you are at 100% horizontal monitor distance on a 16:9 screen, but then swap your screen for a 4:3 one, the match point is now outside the edge of both screen axes, but the sensitivity does not change because of this.
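The 178% figure is just the aspect ratio applied to the same match point. A small sketch, with hypothetical helper names, and assuming the 4:3 screen keeps the same vertical FOV as the 16:9 one:

```python
def vertical_percent(horizontal_percent, aspect_w, aspect_h):
    """Express a horizontal monitor distance as a percentage of the vertical axis."""
    return horizontal_percent * aspect_w / aspect_h


def horizontal_percent(vertical_pct, aspect_w, aspect_h):
    """Express a vertical monitor distance as a percentage of the horizontal axis."""
    return vertical_pct * aspect_h / aspect_w


v = vertical_percent(100, 16, 9)
print(round(v))                            # 178
print(round(horizontal_percent(v, 4, 3)))  # 133
```

On the 4:3 screen the same match point sits at about 133% horizontal and 178% vertical, so it is past the edge on both axes while the sensitivity itself is untouched.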

-

No, the calculation is nothing to do with the screen really, the same scaling applies to the FOV regardless. The fact you're at 178% should be a first clue - you're already +78% over the top of the monitor. Just as the match point can be outside the edge of one side of your monitor, it can be outside the edge of both.

-

Questions about the magnification of Scopes

TheNoobPolice replied to Scca's topic in Technical Discussion

From a design perspective it's actually best when developers can use in-engine tools to design by hand what works optimally for the specific gameplay / maps / ranges etc by feel. It's incredibly unlikely that the best / most balanced experience just happens to be a nice round number by focal length scaling. But this then means you might get values like 1.89356% magnification or whatever, so it makes sense for the UI to just round those to memorable numbers (or just use names like "close range"). At the end of the day the math should serve the experience, not the other way around. -

This is a large improvement and much more readable, especially with "advanced" output selection! One request: is it possible for the side by side output view to be accessible without maximising the window entirely? It still makes sense for it to only be accessible at a minimum window width, but maximising is a bit severe on a wide screen for any browser.

-

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

“To prove csgo is affected by windows registry settings with raw input enabled, all you have to do is enter bogus windows registry settings then disable raw input in game” Flawless logic -

The Optimum Tech video on DPI latency / input lag used a flawed methodology to test movement latency, as there was no normalised output distance for each tested input distance (DPI value). He measured movement resolution, NOT latency. There is no input lag or latency effect at all for different DPI values. Imagine Usain Bolt was sprinting against a 5 year old and they both set off at exactly the same time. If we apply the same testing philosophy that we see in that video, we would only measure the time until the first foot landed on the ground, and because of their tiny little legs and narrow stride pattern, we would therefore conclude the 5 year old was the faster runner. Suffice to say, it’s not the time until the first point of data, but the time to a significant target location that defines “input latency”, i.e. input to “something”. Of course, a higher DPI would make “some first movement” faster than a low DPI at the same hand speed, as that is the definition of DPI, but presuming you have the same effective sensitivity in a game between DPI settings (i.e. the same 360 distance) then the distance turned in-game is smaller for that first movement, so additional inputs (which take additional time!) would be required to turn the same distance as only one input would do from the lower DPI mouse. Once the minuscule amount of time has passed for the lower DPI mouse to send data, it updates to exactly the same position as the higher DPI mouse at exactly the same time. Saying there is a different “sensor lag” or "latency" for the natural effect of DPI is misleading - the path the data takes to your pc is exactly the same. In other words, there is no difference in input lag for different DPI values. What was tested is easily calculable with simple math and doesn't need to be tested in this manner. If you could set the DPI to "1", and moved your hand at 1 inch per second, you would see 1000ms pass before the count was sent and their measuring devices registered some movement.
This does not mean there is 1 second of latency in the mouse input.
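The "simple math" in the DPI post above can be sketched as follows. This is a toy model under stated assumptions: a steady hand speed, an ideal sensor, the polling interval ignored, and both mice set to the same 360 distance (10 inches here, an arbitrary illustrative value). The function names are made up.

```python
def counts_after(dpi, speed_ips, elapsed_us):
    """Whole counts the sensor has produced after elapsed_us microseconds."""
    return dpi * speed_ips * elapsed_us // 1_000_000


def degrees_turned(dpi, speed_ips, elapsed_us, inches_per_360):
    """In-game rotation after elapsed_us, with sensitivity normalised to inches_per_360."""
    counts_per_360 = dpi * inches_per_360
    return counts_after(dpi, speed_ips, elapsed_us) * 360 / counts_per_360


# Hand moving at 1 inch per second, 400 DPI vs 1600 DPI, same 360 distance.
for elapsed_us in (625, 2500):
    low = degrees_turned(400, 1, elapsed_us, 10)
    high = degrees_turned(1600, 1, elapsed_us, 10)
    print(elapsed_us, low, high)

# The "DPI 1" thought experiment: no count until a full second has passed.
print(counts_after(1, 1, 999_999), counts_after(1, 1, 1_000_000))  # 0 1
```

At 625 microseconds only the 1600 DPI mouse has reported anything, but it has turned a quarter of the angle; at 2500 microseconds both have turned exactly the same amount.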

-

Monitor Distance - Dynamic is now live!

TheNoobPolice replied to DPI Wizard's topic in Technical Discussion

I don't play Valorant, but I only use MDD for in-game ADS / zoom scaling changes. I'd pretty much always set hipfire to 360 distance so my navigation feels familiar. Val is a lot more about holding angles though, so maybe the navigation is less important than the crosshair speed. I don't know, not my thing honestly. -

What you have described is 100% monitor distance. There’s an option for that for all calculations here - it’s a fairly common method used in game engines but can vary due to different FOV measurements used. But you can think of it most easily as just:

ADS 360 distance = hipfire 360 distance / (zoomFOV / hipFOV)

This will make all tracking movements more sensitive the more you zoom in though. I can tolerate it when it’s based on the vertical FOV or 4:3 horizontal, but a 16:9 horizontal FOV basis for it is way too fast for me unless I’m extremely zoomed in to like an FOV of 1.
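As a rough illustration of the formula in the post above, the sketch below computes the ADS 360 distance from the FOV ratio and then checks it against the general monitor distance match. The general `atan`/`tan` form is the commonly used monitor distance formula and is an assumption here, not something stated in the post; both function names are hypothetical, and the FOV must be measured along the same axis the percentage refers to.

```python
import math


def ads_360_fov_ratio(hipfire_360, hip_fov_deg, zoom_fov_deg):
    """ADS 360 distance = hipfire 360 distance / (zoomFOV / hipFOV)."""
    return hipfire_360 / (zoom_fov_deg / hip_fov_deg)


def ads_360_monitor_distance(hipfire_360, hip_fov_deg, zoom_fov_deg, percent):
    """ADS 360 distance matched at `percent` of the screen edge (FOVs below 180)."""
    k = percent / 100
    hip_angle = math.atan(k * math.tan(math.radians(hip_fov_deg) / 2))
    zoom_angle = math.atan(k * math.tan(math.radians(zoom_fov_deg) / 2))
    return hipfire_360 * hip_angle / zoom_angle


print(ads_360_fov_ratio(30, 100, 50))  # 60.0
print(math.isclose(ads_360_monitor_distance(30, 100, 50, 100),
                   ads_360_fov_ratio(30, 100, 50)))  # True
```

Lower percentages give a longer ADS 360 distance for the same FOV pair, which is why a 100% match on a wide horizontal FOV feels fast.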

-

I imagine you are the same person who asked in our discord, but this short script works for Logitech Gaming Software, and I imagine it also works for G-Hub if it still has the Lua scripting. If not, you could downgrade to LGS since you have an older mouse which would be supported. The last good version was 9.02.65.

    EnablePrimaryMouseButtonEvents(true);

    function OnEvent(event, arg, family)
        -- arg 2 is assumed to be the right mouse button
        if (arg == 2) then
            if (event == "MOUSE_BUTTON_PRESSED") then
                -- lower DPI while the button is held
                PlayMacro("DPI Down")
            elseif (event == "MOUSE_BUTTON_RELEASED") then
                -- restore the original DPI on release
                PlayMacro("DPI Up")
            end
        end
    end

You would just need to create only 2 DPI levels in the software to toggle between (maybe set up a new profile with them) and then enter the above code in the scripting menu, replacing anything that is there. Ensure the software is kept running because scripts don't save to the mouse.

-

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

Pretty much! -

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

You literally just pasted a huge quote from him where he is talking about that exact thing, and you have mentioned repeatedly about changing the "smoothmousecurves to 0", which is "the MarkC fix". No one needs to do that anymore, at all. Like ever. It's a decade out of date. People changing SmoothMouseXCurve and SmoothMouseYCurve in the registry (aka the MarkC fix) as a routine habit on Windows installs are simply copy/pasting decades-old internet advice. Doing so may even break things if people mess with stuff they don't understand. Raw Input is there for a reason. Off-topic. I never said anything about cs source or 1.6. It's also completely irrelevant to anyone playing csgo, which is the entire point of the discussion from the statement "CSGO has 100% FAIL sensitivity". False. It doesn't. If you wanted to talk about cs source or 1.6 and their specifics you could have started a thread doing so. Fix what? There's a reason the entry on this website says only this for CSGO: And it doesn't also say ensure you have smoothmousecurves set to 0 and all those launch arguments you mention. Because it is not required. But up to you man, if you want to ignore the relevant game engine code directly shown to you, ignore a video in this thread by the owner of this website you are subscribed to showing the input is not smoothed or accelerated, and instead go by "a lot of people feel"....then good luck. I've tried to help you with this to make you see the game is fine, but if you insist on inventing problems that you then have to work around there's nothing anyone can do about that. -

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

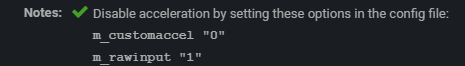

This is incorrect and just misinformation. All you need to do is enable Raw Input and ensure the in-game accel is off (m_customaccel = 0, which isn't related to the Windows accel whatsoever). My motivation for pointing this out is that a lot of fairly naïve newcomers to gaming lurk on forums like these to get info, and this sort of thing is totally confusing and just completely wastes their time. I know for a fact you do not need to enter those launch args to get unsmoothed / unaccelerated / unfiltered mouse input - you can see exactly what those params do in the code I posted and exactly how Raw Input is being called. You can also see in this very thread a video where DPI Wizard is sending different count distance values at different input cadences and there is no smoothing on the output, as the movement frame-by-frame is identical and it ends up at exactly the same spot (so no accel either). I know you are not experiencing any smoothing at all with m_rawinput = 1 because I know it does not exist (unless you deliberately go out of your way to enable m_filter = 1). So I am genuinely curious what your motivation is to pretend otherwise. Like is it just an "I want to be right" thing, or are you genuinely gaslighting yourself into thinking that unless you mess about in the registry there is some mystical effect on your mouse input? The MarkC registry stuff is 13 years old and hasn't ever been relevant to Raw Input games. All it ever did is make it so that when the "Enhance Pointer Precision" checkbox is enabled in Windows mouse settings, the cursor output is the same as with it disabled. You can just untick the box in Windows mouse settings for the same effect, or enable Raw Input where the checkbox doesn't affect input anyway. There are probably about 3 titles in existence where it was necessary to avoid forced Windows accel, none of which anyone is playing now and/or which have since been updated to use Raw Input anyway. -

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

Why are you doing any of this? That launch arg just calls the WinAPI SystemParametersInfo() Get/SetMouse, which is how applications control the Windows mouse / EPP values if they want to, and it's storing existing values that it then first checks on init. So in other words, you are manually editing values in the registry to some value you think is going to do something for you, and then asking the game to call that and store it in a variable to use on init. I am not surprised if it breaks something with the cursor. https://github.com/ValveSoftware/source-sdk-2013/blob/master/mp/src/game/client/in_mouse.cpp#L262 The default Windows setting is to have EPP enabled, which is maybe why the convar m_mousespeed has been chosen to default to 1, but this doesn't affect Raw Input in any case. None of those relate to the function for the raw input mouse event accumulator, which overwrites the *mx / *my variables with raw input data if m_rawinput = 1 https://github.com/ValveSoftware/source-sdk-2013/blob/master/mp/src/game/client/in_mouse.cpp#L331 The order the functions are checked / applied is then here - you can see for the GetMouseDelta() function the first two args are the passed-in raw input deltas. https://github.com/ValveSoftware/source-sdk-2013/blob/master/mp/src/game/client/in_mouse.cpp#L663 It checks for its own filtering convar at that point - which defaults to 0 at the top of the file in case you were also worried about that - before passing out the output https://github.com/ValveSoftware/source-sdk-2013/blob/master/mp/src/game/client/in_mouse.cpp#L368 All you need to do in CSGO is set m_rawinput 1 and m_customaccel 0 if you don't want to use either the in-game accel or Windows EPP / WPS settings. There's no reason to enter any other convars or worry about anything else. It matters not one jot whether Enhance Pointer Precision is enabled or not (m_mousespeed = 1) when the game is calling Raw Input. -
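The order of operations described in the post above can be modelled in a few lines. This is a simplified, hypothetical sketch and not a port of in_mouse.cpp: the class and function names are invented, the sample deltas are made up, and the filter is assumed to be a plain average with the previous frame's delta.

```python
from dataclasses import dataclass


@dataclass
class MouseConvars:
    m_rawinput: int
    m_filter: int


def get_mouse_delta(cursor_delta, raw_delta, previous_delta, convars):
    """Simplified model: raw input replaces the cursor delta, then optional filtering."""
    mx, my = cursor_delta  # delta from the Windows cursor (affected by EPP / WPS)
    if convars.m_rawinput:
        mx, my = raw_delta  # raw input overwrites it, so EPP / WPS no longer matter
    if convars.m_filter:
        # assumed filter: average with the previous frame's delta
        mx = (mx + previous_delta[0]) * 0.5
        my = (my + previous_delta[1]) * 0.5
    return mx, my


cursor_delta = (13, 0)  # made-up value, as if EPP had scaled the movement
raw_delta = (10, 0)     # made-up value, counts straight from the device

print(get_mouse_delta(cursor_delta, raw_delta, (0, 0), MouseConvars(1, 0)))  # (10, 0)
print(get_mouse_delta(cursor_delta, raw_delta, (0, 0), MouseConvars(0, 0)))  # (13, 0)
```

With m_rawinput on, whatever the Windows pointer settings did to the cursor delta is simply discarded before any game-side processing.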

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

Yes, to be clear, it is common and good practice to expose the system cursor for menus, and that's not what is important here. Otherwise, you get that Doom Eternal effect where if the user has a lower WPS setting than 6/11 the cursor will be way too fast for the user compared to their desktop -

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

CS 1.6 != CSGO. Why are you talking about a different game now? If you enter some convar that changes the way the mouse is handled then you are possibly overwriting how it gets mouse input. I'm not familiar with that game and the only code I have perused is this https://github.com/ValveSoftware/source-sdk-2013 that CSGO is built on. I guess 1.6 is not, since it was released ten years earlier. Like I said, if the game is actually calling Raw Input (which CSGO does) then those settings don't have any effect. -

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

There are basically three ways games can get mouse input events: Raw Input, Direct Input (deprecated) and Windows Mouse. If you call GetRawInputData() or GetRawInputBuffer() (two API variants of the same fundamental thing) it is physically impossible for those registry settings you mention to have any effect, as the (x,y) packet is obtained beneath user space, and before those functions even occur. In other words, those settings could only affect a game where Raw Input was "enabled" if the "Raw Input" object in the GUI / convar was just flat broken and basically wired to nothing, so it was just calling Windows mouse. This is not the case in CSGO and it is easily demonstrated that it functions correctly. It would also be completely incompetent and I don't think I've seen that ever. The reason a new DPI can feel different even when normalised to the same degree/count is only because of:

1) The mouse sensor is not perfect and has a different % DPI deviation at different settings. Sometimes this can be quite significant and is very common.
2) Assuming you compensate by lowering the sensitivity after increasing DPI, you get a lower minimum angle increment, which can make very slow movements feel smoother / different.
3) The game actually does have errors in its sensitivity formula at certain values. There is no voodoo with this and it is easily tested. CSGO does not have any errors as per DPI Wizard's earlier post.
4) Placebo.

None of that is anything to do with mouse sensitivity - monitor refresh rates, eye tracking in motion vs stationary, screen resolution or anything else. Nothing I have said is out of date or behind the times - there are no developers making their FPS mouse sensitivity calculations incorporate factors relating to eye tracking of moving vs stationary objects in the current year lol. That is all just la la land.
The sensitivity functions in a game will point your crosshair / camera to the exact same point in the game world even if you turn the monitor off or don't even have one connected, so there is obviously no link to that. You are almost talking as if you think the turn increment in the game has to be a division of a pixel size / distance or something? Suffice to say it doesn't. There is also no "jitter" in the game math for calculating sensitivity; it's just numbers, and math is math. Any timing jitter occurs at the mouse firmware side and its interface to the operating system due to the realities of Windows timing I mentioned before - that is also not a function of screen resolution / monitor refresh rate anyway. One last point before I am done with this silly thread about things that are already completely understood lol. Any digital function has a finite resolution, and if you so wish, you can zoom into it close enough and find errors. If you blow those errors up into a big enough image on your screen it can look like something significant, and we can create a story about it for x,y,z reasons, but it's effectively just a non-existent problem. I can view an audio wave at 96khz that was originally sampled at 192khz and when zooming in I can see artefacts of the resolution that are not there in the 192khz source. Does this mean 96khz is awful and all our audio needs to be minimum 192khz? Of course not, even 48khz is totally fine. Because apart from those "audiophile" tosspots who insist on paying a grand per meter of cable that is soldered using the tears of a Swan and insulated with Albatross feathers, it's all just bollocks and completely unnecessary. I get the feeling that if we already had 100,000hz mice, 800 PPI screens, 10,000hz refresh rates, and 10,000FPS gaming, you'd still just be zooming in and taking screenshots on your website saying "these microseconds are not enough, my new mouse API removes cursor micro-stutter to within the nanosecond!
Look at the jitter your cursor has!" The masses are asses though and no doubt there's always some plonker to sell "magic beans" to, so I wish you good luck convincing them with that -
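Point 2) in the list above (a lower minimum angle increment after raising DPI and lowering sensitivity) is easy to check numerically. The sketch assumes the usual Source-style relation of degrees per count = sensitivity x m_yaw with m_yaw = 0.022; that value and the helper names are assumptions for illustration, not taken from the post.

```python
M_YAW = 0.022  # assumed default yaw scale, degrees per count at sensitivity 1


def degrees_per_count(sensitivity, m_yaw=M_YAW):
    """Smallest possible turn: the rotation produced by a single count."""
    return sensitivity * m_yaw


def inches_per_360(dpi, sensitivity, m_yaw=M_YAW):
    """Hand distance needed for a full rotation."""
    return 360 / degrees_per_count(sensitivity, m_yaw) / dpi


for dpi, sens in ((400, 2.0), (1600, 0.5)):
    print(dpi, degrees_per_count(sens), round(inches_per_360(dpi, sens), 2))
```

Both setups come out at roughly 20.45 inches per 360, but the 1600 DPI one turns in steps of 0.011 degrees instead of 0.044, which is the "smoother at very slow speeds" feel and nothing more.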

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

Hand-waving in full effect I see.... The reason 8khz doesn't work a lot of times is nothing to do with "mouse mathematics" or floats vs double precision of sensitivity variables. It is because of how games acquire input themselves. A game like Valorant only works with 8khz when it calls GetRawInputBuffer() to hold the inputs until an arbitrary buffer size is filled when the engine is ready for the input. If any app just gets WM_INPUT messages "as they come" as per a standard read of Raw Input, then unless its pipeline is so trivial that it is only doing something like updating a counter, it will most likely fall over with inputs spamming in haphazardly every ~125us. The symptom is dropped packets, negative accel and / or just maxed CPU usage and stuttering. None of this is anything to do with sensitivity calculations being superior / inferior. Windows is also not an RTOS and is objectively bad once timing gets tight; it becomes extremely expensive to try and do anything accurately at these kinds of timings. This is not going to change as it's a fundamental of the Windows environment. The only reason low DPI can work with 8khz in some games where high DPI doesn't is because the DPI is nowhere near saturating the polling rate and you're getting a much lower input cadence. Set your mouse to 8khz and 400 dpi and move at 1 inch per second and your update rate is 400hz (obviously) and is therefore no longer "8khz" as far as the game is concerned. This is nothing to do with the DPI setting itself, which the game has no knowledge of or interaction with, as DPI Wizard already said. Most simulation loops of in-game physics, enemy positions - things like whether a crosshair is over an enemy etc. - will run at 60hz, maybe a really competitive FPS would run higher, and maybe they poll mouse input faster at 2 or 3 times the frame rate of the game, with the textures / graphics rendering running at the actual frame rate obviously.
Usually though, you would register event callbacks from input devices which are then passed to a handler / accumulator that is then called once at the start of each frame. In other words, it does not matter if you are sending 8000 updates a second to the OS, because a game will just buffer them all and sum the total mouse distance to be called at the start of each render frame anyway - it makes no practical difference to your crosshair position whether you do this work in the firmware of the mouse at 1khz, or whether a game does it instead at 8khz. The only important factor is that the polling rate is greater than or equal to the highest frame rate of the game at a minimum. If you think using 8khz is giving 8000 discrete rotational updates of your crosshair a second, and for each of those positions an enemy location is being calculated for whether said input would be over a target or not (i.e something meaningful) then you are mad. Once we get into the future where performance improves, it is also not inevitable this will change - rather the opposite. We have, for example the precedent of "Super Audio CD" and "DVD Audio" in the audio realm which were both large increases in resolution vs CD quality on a factual basis, yet both failed as standards precisely because that level of resolution is not required for the user experience - instead, users actually gravitated towards lower resolutions (compressed audio formats) and smaller file sizes for easier distribution. Point being - if such technological improvements were available to game engine developers to do more complex computations within a smaller timeframe, they will not be using such resource to update the crosshair position more frequently. There are many things such as higher animation fidelity, better online synchronisation systems, more complex physics, rendering improvements etc which would all be much higher priority and more obvious quality gains. 
Either yourselves or Razer's 8k marketing team moving a cursor on a desktop and posting pictures of "micro stutter" is not going to make any user see a problem. This is unlike the actual micro-stutter that occurs in 3D rendering due to frame-cadence issues, which is readily apparent to even the uninitiated user. There is no one using a 1000hz mouse on a 144hz display and going "geez, I really hate these micro-stutters on my desktop cursor, I can't wait for that to be improved!". In short, you are inventing a problem and then trying to sell a solution / idea of a solution, and your argument effectively boils down to saying that anyone who disagrees just doesn't have the eyes to see the problem, when the truth is no problem exists in the first place, and your solution would not even solve it if it did. Mouse report rate does not need to be synchronised to monitor refresh rate or game frame rate whatsoever, and insisting it would improve anything fundamentally misunderstands how the quantities of each are handled and how they interact with one another. Games will always render frames at arbitrary intervals because each frame has infinitely variable parameters which all require an arbitrary amount of resources, and mouse input polling on Windows will always be haphazard timewise, and always has been, due to the fundamental design of the operating system. Moreover, once the timings get down to a millisecond or so there is no value to anyone in any case. No one is going to care about turning G-sync on if the monitor can run 1000hz (this is effectively the "Super Audio CD effect"), and any "improved" mouse API that presumably could send decimal distance values to the OS instead of the standard (x,y) packet of integers with remainders carried to the next poll would also achieve nothing of value to anyone over existing systems that are stable, extremely well understood and established. -
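Two claims from the 8khz post above lend themselves to a quick sketch: the effective update rate is capped by DPI x hand speed, and a per-frame accumulator ends up with the same total whether the summing happened in the mouse firmware at 1khz or in the game at 8khz. This is an idealised model with hypothetical names; real report timing on Windows is far less tidy.

```python
def effective_update_rate_hz(dpi, speed_ips, polling_hz):
    """Reports per second that actually carry movement at a steady hand speed."""
    return min(polling_hz, dpi * speed_ips)


def accumulate_frame(reports):
    """Sum every (x, y) report received since the previous frame."""
    total_x = sum(x for x, _ in reports)
    total_y = sum(y for _, y in reports)
    return total_x, total_y


print(effective_update_rate_hz(400, 1, 8000))   # 400
print(effective_update_rate_hz(3200, 5, 8000))  # 8000

# The same 8 counts of movement within one millisecond of a frame:
at_1khz = [(8, 0)]      # one report, summed in the mouse firmware
at_8khz = [(1, 0)] * 8  # eight reports, summed by the game instead
print(accumulate_frame(at_1khz) == accumulate_frame(at_8khz))  # True
```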

If there was an objectively correct way to set it up, it wouldn't be an option in the menu. The developer would just set it internally to what is "correct". It's entirely user preference and just functions as a fine-tune aiming sensitivity slider, where the values represent a percentage of screen space instead of a coarse linear percentage of degrees / count. The same values / methods exist on this website so you can have the same feel and conversion approach from one game to another. You don't need to stick to any specific numbers, you may find 131.05% is perfect for you and not 133% for a given scope. It's all completely arbitrary.

-

High Dpi issues on old Games / Engines

TheNoobPolice replied to Quackerjack's topic in Technical Discussion

A gish gallop of nonsense. Blurbusters have a history of making extremely simple premises sound extremely complex and using sophistry and hand-waving to make sure that no one who could actually debunk it would ever have the time or inclination to. I mean where do you start with this: "At 60Hz using a 125Hz mouse, fewer mouse math roundoff errors occured(sic). But at 500Hz refresh rate and 8000Hz mouse, especially when multiplied by a sensitivity factor, can generate enough math rounding errors occurs in one second (in floats) to create full-integer pixel misalignments!" Utter drivel I'm afraid.