Everything posted by TheNoobPolice

Disable "dpi too high" message for advanced

TheNoobPolice replied to randomguy7's topic in Feedback, suggestions and bugs

Whilst using the game's lowest sens value may seem the most obvious choice, it is not that unusual for sens values to be a bit broken at the very lowest end in some games, and these games tend to work best at their default values. However, default values can often be undesirable because they produce a pixel ratio greater than 1 on very high resolution displays (which is kind of moot really, but it's the sort of thing people still want to avoid). So I think the best option would be for the calculator to have an advanced option to solve for the multiplier when the user inputs both the target game sens and the mouse DPI. This leaves it in the hands of the user which game sens value to pick in the target game (based on the minimum | default | maximum values shown in the info section), because hardcoding the minimum value is probably not the best choice in all circumstances.

Disable "dpi too high" message for advanced

TheNoobPolice replied to randomguy7's topic in Feedback, suggestions and bugs

I think what you are effectively asking is for the user to be able to enter the target game sensitivity (which in the above example would be the minimum of "1") and then solve for the target DPI instead (and therefore, with the mouse DPI entered, output a scaling multiplier for said DPI). Whichever way around it is, 2 of the variables need to be input to solve for the 3rd as output; the calculator can't solve for the game sensitivity based on a DPI input field whilst also outputting a multiplier that effectively scales DPI, because that is a circular dependency. Or... perhaps it could be a mode where the calculator automatically inputs the minimum (or default?) sens for the game and then outputs the multiplier required? So the user inputs mouse DPI, the calculator automatically inputs the minimum available in-game sens, and can therefore solve for the DPI scaler. I think that could be useful in some circumstances, but I also feel that the way you are going about this is probably fairly unique to yourself, because changing a filter driver DPI scaler instead of the game sens has the rather conspicuous side effect of also changing your desktop cursor speed every time you want to play a new game.
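As a rough sketch of the "solve for the multiplier" mode described above, assuming a game whose sensitivity is linear like Source (turn per count = yaw × sens) and a target expressed as cm/360. The function names and example numbers are illustrative only, not the calculator's actual implementation:

```python
CM_PER_INCH = 2.54


def cm_per_360(yaw_deg: float, game_sens: float, dpi: float) -> float:
    """Hand motion (cm) per full turn, assuming turn per count = yaw * sens."""
    counts_per_360 = 360.0 / (yaw_deg * game_sens)
    return counts_per_360 / dpi * CM_PER_INCH


def dpi_multiplier(target_cm_per_360: float, yaw_deg: float,
                   game_sens: float, mouse_dpi: float) -> float:
    """Filter-driver DPI multiplier that hits the target with a user-chosen game sens."""
    counts_per_360 = 360.0 / (yaw_deg * game_sens)
    required_dpi = counts_per_360 / (target_cm_per_360 / CM_PER_INCH)  # the "output DPI"
    return required_dpi / mouse_dpi


# The user picks the game sens (e.g. the minimum of 1) and enters their mouse DPI.
mult = dpi_multiplier(40.0, 0.022, 1.0, 800)
print(round(mult, 4))  # 1.2989
print(cm_per_360(0.022, 1.0, 800 * mult))  # back to ~40 cm/360
```

The game sens is an input here rather than an output, which is what avoids the circular dependency.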

Disable "dpi too high" message for advanced

TheNoobPolice replied to randomguy7's topic in Feedback, suggestions and bugs

How would the calculator know what your Raw Accel sens multiplier is? Are you saying you want a filter driver DPI scaler field so you don't have to do the math?

Disable "dpi too high" message for advanced

TheNoobPolice replied to randomguy7's topic in Feedback, suggestions and bugs

Raw Accel effectively scales your DPI, so if you're still entering your mouse DPI in the calculator while scaling it with Raw Accel, you're not really doing it right. You should enter the output DPI.

i think you could tap into the controller market

TheNoobPolice replied to philheath's topic in Feedback, suggestions and bugs

It's fairly easy to do a "full pitch/yaw time to 360" and then calculate zoom scaling methods from the same barometer. This is how BF USA worked on sticks. The real issue is that it's relatively meaningless. A joystick is basically "servo aim": you move the stick to a position and the system continually aims for you. You are not "aiming" yourself over a distance. This means that for every position on the stick, you got there through an arbitrarily small amount of time moving through lower positions, so the output over time for each stick position is always different; even full yaw reached from one starting position != full yaw reached from another. It is humanly impossible to make the exact same motion a second time. This is true regardless of whether the game adds additional stick acceleration curves based on either physical stick acceleration or stick position. In other words, sticks always have inherent negative acceleration as you move them due to their physical properties, and that is before you start with the response curve, which is rarely linear by default even if a game does not add stick acceleration. It may not matter to those wanting the conversion, of course, as the perception of at least "something" matched may be satisfactory, but situations where you could take a setting from game A, move it to game B at the same FOV and have it be "the same" in any meaningful way (and by meaningful, I mean "any arbitrary stick movement produces the same angular displacement over time in the game world at a given FOV") would likely only exist a handful of times within the same game franchise.
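To illustrate why "time to 360" doesn't capture stick aiming, here is a small simulation under made-up assumptions (a 360 deg/s maximum turn rate, a squared response curve, and a linear push from centre to full deflection). Two motions that both end at full deflection, but ramp up at different speeds, turn noticeably different amounts in the same time:

```python
def turn_after(ramp_time_s: float, total_time_s: float = 0.5,
               max_rate_dps: float = 360.0, exponent: float = 2.0,
               dt: float = 0.001) -> float:
    """Integrate yaw over time for a stick pushed linearly to full deflection.

    All parameters are hypothetical; real games use their own curves.
    """
    angle = 0.0
    for step in range(round(total_time_s / dt)):
        t = step * dt
        deflection = min(t / ramp_time_s, 1.0)                # stick position 0..1
        angle += max_rate_dps * deflection ** exponent * dt   # response curve -> yaw rate
    return angle


fast = turn_after(ramp_time_s=0.1)
slow = turn_after(ramp_time_s=0.3)
print(f"fast push: {fast:.1f} deg, slow push: {slow:.1f} deg")
```

Both motions share the same "full yaw" speed, yet the angle turned depends entirely on how the stick got there.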

Dealing with mouse drifting while in motion, at wits end.

TheNoobPolice replied to sqwash's topic in Off Topic Discussion

Well, let's put it this way: if their symptom does indeed have the same cause as yours, and is indeed the same symptom, then they all absolutely don't know how mice work, because that's what happens well within normal spec, and it's not up for debate. So we then have to presume they are working to fix a different issue that just has similar-sounding symptoms, and/or a separate issue that simply exacerbates the very normal symptoms that you have. I don't have the inclination to read through all their posts, because I already know what your issue is. It's extremely clear and understood that you have expected behaviour; you can see in this test I shared before that all mice drift, usually in the same pattern with the same type of motions, even when moved in a totally consistent way by a machine (which would always produce less drift than a human hand). The difference is usually how much smoothing is applied internally or the accuracy of the sensor / implementation to begin with, along with surface differences that affect tracking performance slightly, but it never goes away entirely. The idea that all these mice from different brands, made by different engineers in different years, all just happen to have the same "glaring bug", and that they all just happened not to notice it to fix it? And you claim what I said was far-fetched?! In any case, I've tried on multiple occasions now to reassure you, but you don't seem interested in that. Anyone can see, by moving their mouse around on the pad within screen space and then moving it back to the exact same starting position without lifting it off the pad, that the cursor will now be on a different pixel on the desktop than its starting location. This is really nothing special or unusual, I'm afraid. Anyway, I've exhausted my interest in this particular topic, so I'll leave you to it.

Dealing with mouse drifting while in motion, at wits end.

TheNoobPolice replied to sqwash's topic in Off Topic Discussion

Who knows? Maybe they all just don't know how mice work, or maybe it's a slightly different issue they had. In any case, as far as the issue you highlight and demonstrate is concerned, it's very clear what it is; it's totally understood, and totally expected.

I think you are confusing it with the reverse icon on the calculator, which swaps the target game for the source game. The reverse button is used to find a conversion method from existing sensitivities, instead of what the calculator usually does, which is to find sensitivities from a selected conversion method.

Dealing with mouse drifting while in motion, at wits end.

TheNoobPolice replied to sqwash's topic in Off Topic Discussion

I don't think anyone said it's intended behaviour; it's an unavoidable consequence of current optical sensor technology. Working as expected != working as intended. I'm quite sure the boffins at Pixart don't intend to make a mouse sensor that drifts; it's just the best they can do.

I don’t really understand what you are getting at here. The work IS already done for you on this website; that’s what the calculator is! I think in general people have the wrong impression of the so-called AI (in reality, pattern-matching) models that exist at the moment. It’s just linear algebra. It can’t help you with your human preferences. Good GPT prompt: “Give me the formula for so and so’s theorem in standard math notation”. Bad GPT prompt: “What colour should I paint my living room?” GPT is great as a resource for facts or to pull together knowledge that has been typed before “somewhere”, but it can’t decide what kind of sensitivity conversion you will prefer in a given game.

Pixel ratio - are you pixel skipping?

TheNoobPolice replied to DPI Wizard's topic in Technical Discussion

It actually has nothing to do with the DPI of the mouse. It's defined only by the game's sensitivity, the FOV and the screen resolution.

This is far less trivial than you might think: you’re looking at a decent level of understanding of computer science in general, coding knowledge / the ability to develop accurate testing methods, and a good grasp of algebra, trigonometry and a pinch of calculus thrown in. There’s a reason this site/service is successful and the only one at the quality level it is at; DPI Wizards don’t grow on trees.

How are counts/360 and pixels/count calculated?

TheNoobPolice replied to dontjustdontok's topic in Technical Discussion

Pixel ratio is calculated by dividing the sens-scaled yaw value (i.e. degrees per count) by the number of degrees covered by 1 pixel's distance in the centre of the screen at the given FOV, which can be found as follows, e.g. for Counter-Strike at 1920 x 1080:

yaw = 0.022 degrees
sens = 0.5
horizontal res = 1920 pixels
horizontal FOV = 106.26 degrees
degrees per pixel distance = 2 * atan(tan(hFOV / 2 * pi / 180) / hRes) * 180 / pi = 0.0796 degrees
pixel ratio = yaw * sens / degrees per pixel distance = 0.138
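The same calculation as a short Python sketch (the function names are my own). Note that mouse DPI is not an input anywhere: pixel ratio depends only on yaw × sens, FOV and resolution.

```python
import math


def degrees_per_pixel(hfov_deg: float, hres: int) -> float:
    """Angle covered by one pixel at the centre of the screen."""
    half_fov_rad = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_fov_rad) / hres))


def pixel_ratio(yaw_deg: float, sens: float, hfov_deg: float, hres: int) -> float:
    """Degrees turned per count divided by degrees per centre pixel."""
    return yaw_deg * sens / degrees_per_pixel(hfov_deg, hres)


print(round(degrees_per_pixel(106.26, 1920), 4))        # 0.0796
print(round(pixel_ratio(0.022, 0.5, 106.26, 1920), 3))  # 0.138
```

A ratio below 1 means each count turns the camera by less than one centre pixel's worth of angle.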

How are counts/360 and pixels/count calculated?

TheNoobPolice replied to dontjustdontok's topic in Technical Discussion

With mouse input we convert a physical distance to a virtual distance. To do this, we normalise both to virtual units. The "count" or "mickey" is a virtual unit defined by the DPI: if you are at 400 DPI, then every 1/400th of an inch of linear movement sends a "count" of 1 to the PC. On the PC side we convert these virtual count units to output distance units, which are either pixels in 2D or angles in 3D. The angle in a game is indeed defined by a "yaw" value, e.g. the Source engine uses 0.022 degrees by default; this means that 1/400th of an inch (1 count) of mouse movement turns 0.022 degrees (the base yaw) in the game. These are our normalised input and output virtual distances for your hand motion. The sensitivity of any function is the ratio between the output and the input, so we can treat it as a multiplier. Since we already have our normalised values, we know that a sensitivity of "1" is an input of 1/400th of an inch on the mouse pad and an output of 0.022 degrees, so a sensitivity of "0.5" would be an output of 0.011 degrees for the same input distance. This can always be calculated as outputAngle = yaw * sensitivityFunction() * counts. Therefore, to solve for counts instead, we do counts = outputAngle / (yaw * sensitivityFunction()). Example: we have a game with a yaw value of 0.014 degrees and a sens of 1.2, we're at 1600 DPI, and we want to turn 30 degrees. How many counts do we need to reach this position? We have 30 / (0.014 * 1.2) = 1785.71 counts. To convert back to physical hand motion, it's therefore 1785.71 / 1600 = 1.12 inches on the mouse pad to turn 30 degrees in the game. Obviously, you always have to use the same units for both outputAngle and the yaw value.
I use the term "sensitivityFunction()" because the value exposed to the user is not always linear like it is in Source, so this multiplier would be the return value of whatever formula a game uses to represent sensitivity to the player. That formula is highly variable and completely arbitrary, which is one of the main reasons this site exists.
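The counts formula above as a minimal Python sketch. `sensitivity_function` stands in for whatever (often non-linear) mapping a game uses; it defaults to a linear, Source-style mapping purely as an assumption:

```python
from typing import Callable


def counts_for_angle(angle_deg: float, yaw_deg: float, sens: float,
                     sensitivity_function: Callable[[float], float] = lambda s: s) -> float:
    """counts = outputAngle / (yaw * sensitivityFunction())"""
    return angle_deg / (yaw_deg * sensitivity_function(sens))


def inches_for_angle(angle_deg: float, yaw_deg: float, sens: float, dpi: float,
                     sensitivity_function: Callable[[float], float] = lambda s: s) -> float:
    """Physical hand motion needed: counts divided by counts per inch (DPI)."""
    return counts_for_angle(angle_deg, yaw_deg, sens, sensitivity_function) / dpi


# Worked example from the post: yaw 0.014 deg, sens 1.2, 1600 DPI, 30 deg turn.
print(round(counts_for_angle(30, 0.014, 1.2), 2))        # 1785.71
print(round(inches_for_angle(30, 0.014, 1.2, 1600), 2))  # 1.12
```

A game with, say, an exponential sens slider would pass its own mapping as `sensitivity_function`; the rest of the arithmetic stays the same.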

Conversion of sensitivity from 2D to 3D windows

TheNoobPolice replied to Vaccaria's topic in General Gaming Discussion

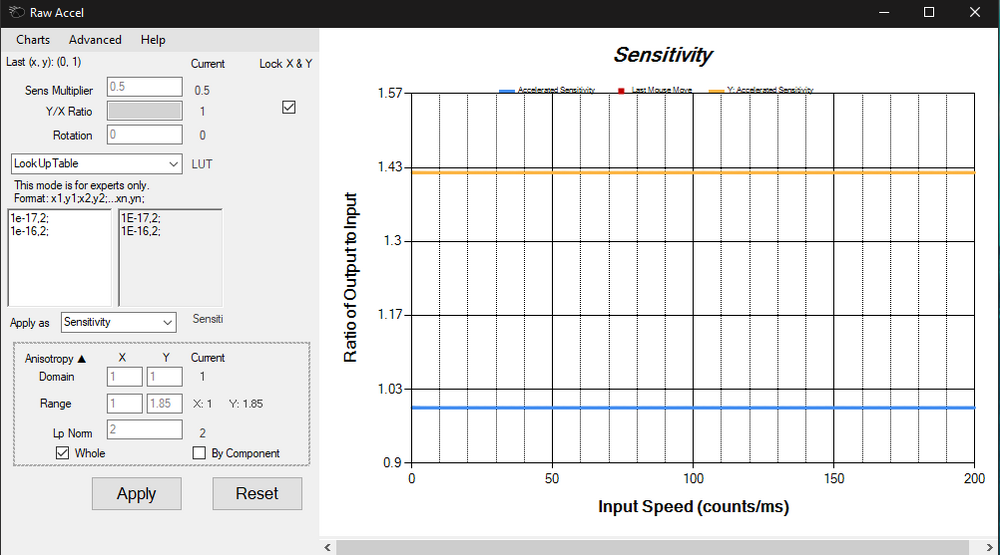

You are going about this in an overly complex way. If you want to increase vertical sens without changing directions and without using acceleration, you would really need something like the Bias mode I added to Custom Curve. In Raw Accel you can do something similar, but you have to "hack" it using LUT mode and anisotropy, creating an "instant" acceleration to a cap that all occurs below the minimum input speed. You could set it as follows:

LookUp Table (Sensitivity mode): 1e-17,2; 1e-16,2;
Sens Multiplier = 0.5
Y Range = 2 * desired y/x ratio - 1 = 1.84972170686 in your case

This creates the same graph as your y/x ratio version, but does not change diagonal directions (this shows what usually happens). This method works because we create some invisible instant acceleration from sens 1 to 2, and the range function has a formula of:

(acceleratedSensitivity - 1) * (rangeX + (rangeY - rangeX) * atan(|y/x|) / (pi/2)) + 1

or, more easily understood: "a single sensitivity applied to both axes, which is linearly scaled towards each axis's configured sensitivity value by the ratio of the input angle to 90 degrees". The transition across angles would be entirely linear in Raw Accel, so an input of 45 degrees would have a sens of 1 + (desired y/x ratio - 1) * (45 / 90) = 1.212430426715, and an input of 22.5 degrees would have a sens of 1 + (desired y/x ratio - 1) * (22.5 / 90) = 1.1062152133575, but there is only ever this one sensitivity applied to both axis components, so directionality is preserved. Increasing vertical sens in this way does not make near-horizontal motions less stable by "pitching up" your crosshair, which is what happens with a y/x ratio change. Using legacy threshold-based angle snapping is a bad idea, since it is extremely flawed and simply obfuscates angles below the set threshold, which does nothing except reduce the fidelity of input.
There could be less compromised ways to facilitate a larger / more forgiving angular window for moving along an axis, but none that are available at the moment.
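To check the numbers, here is the range formula exactly as quoted above, evaluated with the suggested settings (LUT capping at 2, Sens Multiplier 0.5, X range 1). This is a model of the formula in the post, not Raw Accel's source code, and treating the LUT as already at its cap assumes any real movement is faster than 1e-16:

```python
import math


def range_formula(accel_sens: float, range_x: float, range_y: float,
                  dx: float, dy: float) -> float:
    """(acceleratedSensitivity - 1) * (rangeX + (rangeY - rangeX) * atan(|y/x|) / (pi/2)) + 1"""
    theta = math.atan2(abs(dy), abs(dx))  # equals atan(|y/x|), but safe when dx == 0
    return (accel_sens - 1) * (range_x + (range_y - range_x) * theta / (math.pi / 2)) + 1


RANGE_Y = 1.84972170686  # 2 * desired y/x ratio - 1
SENS_MULTIPLIER = 0.5
LUT_CAP = 2.0            # LUT output, reached below any real input speed


def effective_sens(angle_deg: float) -> float:
    dx, dy = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    # One scalar for both axes, so the (dx, dy) direction is unchanged.
    return SENS_MULTIPLIER * range_formula(LUT_CAP, 1.0, RANGE_Y, dx, dy)


for a in (0, 22.5, 45, 90):
    print(a, round(effective_sens(a), 10))
```

Horizontal input lands at exactly 1, vertical input at the desired y/x ratio, and everything in between is interpolated linearly by angle.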

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

Yes! Well, everything that actually affects your mouse input. The GUI, which is just used to visualise and apply settings, is in user space. Obviously not, as that would be ridiculous lol. When you press "Apply", all profile settings are written to the driver, and there is a 1 second delay until they are operational. There is no delay in use.

Dealing with mouse drifting while in motion, at wits end.

TheNoobPolice replied to sqwash's topic in Off Topic Discussion

I'm very sceptical of "feels"; I don't even trust my own. My perception of sens can change day to day depending on how tired or alert I am, and I don't see why this wouldn't also happen to other people. Sometimes the same sensitivity feels sluggish when tracking, sometimes it feels too responsive. The one thing that tends to feel consistent is off-screen movements like 180s, which are more of a "muscle memory" thing (urgh, hate the term), but for the on-screen "hand-eye coordination" aspect of aiming, human perception is not a stable barometer. So, like I said before, your feels can't be debugged, and if it doesn't feel good you should just change the sensitivity or some other factor such as mouse shape / weight / FOV etc. I have actually been trying out a way to scale sens outside of directly axial movements to accommodate subtle day-to-day changes in perceived feel, using linear space (p-norm) principles but with slightly different scaling. I think it works nicely, but I would need more time with it. The idea is that one could move a slider up and down depending on their feel without changing their 180 sens or vertical (read: usually recoil control) movements. I'm aware of games that have done things like this with the camera which wouldn't show up in measurements on sites like this, and I'm sure DPI Wizard doesn't want to start measuring diagonal sens and direction as well lol. The MouseTester app doesn't interact with the USB driver or version at all. It performs a standard read of Raw Input to find distance deltas and uses QPC() to find time deltas (0.1 microsecond precision). On paper, the USB 3 spec itself should not make a mouse behave oddly, but it's down to each vendor's implementation of it at the end of the day.

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

Ha! Oh well, never mind lol. When I refer to "scaling", it is just a term for "does math on". All the functions in Custom Curve operate in a single binary in kernel space beneath Windows and generate a cached table of values; only the cumulative factor for all the functions you select is applied to each input, in one go. When you make a sens toggle, for example, nothing is loaded dynamically; everything is already pre-loaded, so it is essentially different positions of the same vector (this was also a requirement for Faceit anti-cheat compatibility: nothing can be loaded to the driver faster than 1 second).

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

Well, yes, but not really. There’s no Windows API function to change your mouse DPI; Windows itself doesn’t even know what mouse DPI is. Only your mouse vendor's driver can communicate with the device to change its sensor resolution. What we do with filter drivers like Custom Curve, Raw Accel, ReWASD etc. is just perform simple math on the input in kernel space before it reaches the core of OS input processing (we refer to this as “beneath Windows”). For all intents and purposes, it is more useful to imagine those filters existing along the cable between your mouse and PC, because any application that runs on Windows and gets input in any normal way cannot even know the input has been modified. This is why we refer to it as “scaling DPI”, but your mouse is still always operating at the same DPI internally. I’ll go over an example one more time, and if it still isn’t helpful I probably can’t think of a better way to explain it: Let’s say you want to cut the game sens in half dynamically on a keybind, and let’s imagine we can do that. The sens you want when the key is not pressed turns 0.2 degrees per input, and when the key is held it turns 0.1 degrees. Your mouse is at 400 DPI for argument's sake, so upon moving the mouse, nothing happens until you reach 1/400th of an inch of distance on the mouse pad, which then sends an input and turns 0.2 degrees when the key is not held, and 0.1 degrees when it is. Now, back to reality: we can’t actually modify the sens in game. So instead, we set the game to the lowest sens of 0.1 degrees per count and use Custom Curve to scale the effective DPI by 2x, with a keybind that drops the scaling back to 1x while held. This means that when the key is not held, 1/400th of an inch sends a count of 2 instead of 1 in a single packet, so instead of turning 0.1 degrees, the game turns 0.2 degrees.
When you press the key, this goes back to normal scaling, so the game turns 0.1 degrees for every input, which is exactly the same result as if we could modify the game sens directly, with exactly the same granularity to hand motion. If you could compare the two frame by frame, there would be no difference at all at any point.
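The two scenarios can be compared directly in a small simulation. The per-report counts and key states below are made up, and exact fractions are used so the comparison isn't muddied by floating-point rounding. This sketch only models integer scaling factors like the 2x/1x in the example, not how a driver would carry fractional counts:

```python
from fractions import Fraction

# Hypothetical counts per USB report (mouse at 400 DPI) and whether the key is held.
REPORTS = [1, 3, 0, 2, 5, 1, 4]
KEY_HELD = [False, False, True, True, False, True, False]


def game_sens_toggle(reports, key_held):
    """Imaginary case: the game itself turns 0.2 deg/count, or 0.1 deg/count while held."""
    normal, halved = Fraction(2, 10), Fraction(1, 10)
    angle, trace = Fraction(0), []
    for counts, held in zip(reports, key_held):
        angle += counts * (halved if held else normal)
        trace.append(angle)
    return trace


def dpi_scale_toggle(reports, key_held):
    """Real case: game fixed at 0.1 deg/count; a filter scales counts 2x, or 1x while held."""
    game_deg_per_count = Fraction(1, 10)
    angle, trace = Fraction(0), []
    for counts, held in zip(reports, key_held):
        counts_seen_by_game = counts * (1 if held else 2)  # scaled before the game sees it
        angle += counts_seen_by_game * game_deg_per_count
        trace.append(angle)
    return trace


# Same camera angle after every single report.
print(game_sens_toggle(REPORTS, KEY_HELD) == dpi_scale_toggle(REPORTS, KEY_HELD))  # True
```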

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

This is correct, but this is also just the increment resolution I mentioned, so you just set the game to the lower of the sensitivities you want. You could then have the DPI scaling in Custom Curve start at 2x and scale down to 1x on your modifier key / button, for exactly the same effect as the sens moving between 1 and 0.5 in game with DPI scaling at 1x. Thanks! My main contribution is the Bias mode (technical explanation), which changes vertical sensitivity scaling from a component multiplier to a directional weighting; in my opinion this should be the standard way to do it. A lot more people would benefit from a differing vertical sensitivity if it didn't normally change the diagonal direction of their cursor, as it makes things like tuning recoil control much easier, and it helps in games that have a lot of verticality but still require low horizontal sensitivity for aiming accuracy.
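To show the difference between a component multiplier and a directional weighting, this sketch pushes a 45 degree diagonal input through both. The weighting used here (one scalar interpolated linearly by input angle, applied to both axes) is an assumption for illustration, not the actual Bias mode formula:

```python
import math


def component_multiplier(dx: float, dy: float, y_ratio: float) -> tuple[float, float]:
    """Conventional y/x ratio: only the vertical component is scaled."""
    return dx, dy * y_ratio


def directional_weighting(dx: float, dy: float, y_ratio: float) -> tuple[float, float]:
    """Hypothetical weighting: one scalar, interpolated by input angle, for both axes."""
    theta = math.atan2(abs(dy), abs(dx))  # 0 = horizontal, pi/2 = vertical
    scale = 1 + (y_ratio - 1) * theta / (math.pi / 2)
    return dx * scale, dy * scale


def direction_deg(dx: float, dy: float) -> float:
    return math.degrees(math.atan2(dy, dx))


dx, dy = 10.0, 10.0  # a 45 degree diagonal input
print(direction_deg(*component_multiplier(dx, dy, 1.5)))   # ~56.3: direction changed
print(direction_deg(*directional_weighting(dx, dy, 1.5)))  # ~45.0: direction kept
```

Both schemes give pure vertical input the full 1.5x, but only the component multiplier bends diagonals upwards.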

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

I have contributed some features to Custom Curve since I met the owning author (through this site, funnily enough), so I'm very familiar with it, but I think you may be expecting something different to happen than what actually would if you could dynamically alter game sens rather than scaling DPI... Let's say you're at 6400 DPI and the game is set to some sensitivity; for argument's sake we'll imagine the Source engine at sens 1, so each count from the mouse turns 0.022 degrees. This means that for every 1/6400th of an inch of linear hand motion, 1 count is sent to the game, and the game updates the camera to a new angle offset by 0.022 degrees. If we wanted to turn 10 degrees in game, we'd need 10 / 0.022 = 454.54 counts, and 454.54 / 6400 = 0.071 inches of hand movement turns 10 degrees. If you could then somehow dynamically scale the game to sens 0.5, 1/6400th of an inch offsets the camera by only half that, i.e. 0.011 degrees, so we now need 10 / 0.011 = 909.09 counts, and 909.09 / 6400 = 0.142 inches of hand movement turns 10 degrees (exactly double, of course). So what happens if we instead scale the DPI down to 3200? Our sens is still set to 1, so our increment is still 0.022 degrees. We now have 10 / 0.022 = 454.54 counts, and 454.54 / 3200 = 0.142 inches of hand movement turns 10 degrees: exactly the same hand motion produces exactly the same turn. So I am not sure what kind of muscle memory you think could be affected by changing DPI that wouldn't also be affected by changing the sensitivity. The only difference is the resolution of the minimum turn, and as I previously mentioned, you could just set the game to the minimum sens (i.e. the highest turn resolution) you need to feel smooth on slow motions, and then scale the DPI higher for exactly the same effect.
If you increase the DPI, the amount of data sent is capped anyway by the polling rate (the whole reason polling is part of the USB spec is to limit the data bandwidth that would otherwise occur if the CPU were interrupted every time the mouse had data to send), so it would not be possible for this to cause additional jittering unless you are trying to use 8k or something; in which case, just don't.
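A toy model of the polling point above, assuming the mouse sends at most one report per poll interval containing all counts accumulated since the previous one (the hand speed and DPI values are arbitrary). Raising DPI makes each report carry more counts, but the number of reports per second stays at the polling rate:

```python
def simulate_reports(hand_speed_ips: float, dpi: int, polling_hz: int,
                     duration_s: float = 1.0) -> list[int]:
    """Counts carried by each report for a constant hand speed (toy model)."""
    reports, sent = [], 0
    for poll in range(1, round(duration_s * polling_hz) + 1):
        counted_so_far = int(hand_speed_ips * dpi * poll / polling_hz)  # sensor running total
        reports.append(counted_so_far - sent)  # everything since the last poll
        sent = counted_so_far
    return reports


for dpi in (800, 3200):
    r = simulate_reports(hand_speed_ips=10, dpi=dpi, polling_hz=1000)
    print(dpi, "DPI:", len(r), "reports/s,", max(r), "counts per report")
```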

Using software like CheatEngine to 'hack' my sensitivity.

TheNoobPolice replied to bread94's topic in Technical Discussion

Why would it be required to scale sens and not DPI? If you want a smaller turn increment, just set the sens to the lower value of whatever the change is. You could load the Raw Accel profile only when playing the game, or even script it trivially with something like AutoHotkey to load both the game and the profile automatically. This is much safer and would work better than trying to modify game memory using Cheat Engine.

Dealing with mouse drifting while in motion, at wits end.

TheNoobPolice replied to sqwash's topic in Off Topic Discussion

Well, it's not really scientific. One of the main reasons is simply that Windows is not an RTOS and is objectively bad once timings get tight (note: there is one timing procedure called the Performance Counter, which these days usually has 10 MHz precision depending on hardware, but it is only really used for elapsed time measurement and can't be "spun" to delay a thread without high CPU usage, so most timing functions called by programs use lower-resolution procedures). A variance in poll time affects what distance is reported (i.e. relative to what should be reported) when the OS gets data from the mouse. There may also be some per-mouse / on-board firmware differences between the selected polling rates, which I don't have any insight into as it isn't an area I am directly familiar with, but the way it generally works is that the USB device has to flag an interrupt to the CPU in order for any data sent/received to be processed, and this can be deferred/delayed by the CPU for any reason; in a Windows environment these delays happen a lot. People have run tests in other environments using the same USB spec and they can observe microsecond precision, so USB itself is not the issue here. In Windows, if you use a mouse with 8k polling, for example, you will see totally crazy input cadence. Windows doesn't handle it well enough even just to update the cursor position every 125 µs, and games especially don't.
A lot of the "better smoothness" difference people feel with 8k is actually just dropped packets, an objectively worse performance that they convince themselves is better. Instead of every input arriving every 125 µs, you will get some that arrive in 40 µs or less and some that take more than 500 µs, and most games won't be able to process the former inputs unless they do a buffered read of Raw Input (which is by no means standard / common, and by design pre-buffers inputs into an accumulator outside of the game, which totally misses the point of what people think high Hz is doing for them in-game anyway). Anyway, I digress. I still use 500 Hz because it works well in pretty much all games no matter how they get input, results in low CPU thread usage, and is always going to be significantly above the max frame rate of any game I play and my monitor refresh rate, which is the only important barometer imo.
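To illustrate how poll-time variance changes the distance each report carries, here is a sketch assuming a perfectly constant hand speed and a handful of made-up arrival intervals around the nominal 125 µs of 8k polling. Counts are left fractional to keep the arithmetic visible:

```python
def counts_per_report(hand_speed_ips: float, dpi: float,
                      intervals_us: list[float]) -> list[float]:
    """Distance (in counts) covered during each interval at a constant hand speed."""
    counts_per_us = hand_speed_ips * dpi / 1_000_000
    return [counts_per_us * t for t in intervals_us]


# 5 in/s at 1600 DPI = 8000 counts/s, i.e. 1 count per nominal 125 us interval.
ideal = counts_per_report(5, 1600, [125] * 8)
# Hypothetical jittered arrivals covering the same 1000 us window.
jittered = counts_per_report(5, 1600, [40, 40, 45, 500, 125, 40, 85, 125])
print([round(c, 2) for c in ideal])
print([round(c, 2) for c in jittered])
```

The total over the window is unchanged, but what each individual report says about the motion swings by more than 10x even though the hand speed never changed.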