CaptaPraelium


Everything posted by CaptaPraelium

-

Posting this separately because I can't figure out how to do spoilers and honestly I'm not even sure I should mention it hahaha

<spoiler>I'm hesitant to mention this because I want to avoid any kind of arbitrary numbers in this, but yes, it will be possible to focus the balance on a certain area of the screen (or beyond it). I mention this because, as sammymanny has outlined above, use cases vary. I mean I practically always flickshot... and practically never beyond 50% of my screen height. So do I really want to sacrifice accuracy within that 50%, in order to be balanced with the area beyond it? I don't know.

This should also have the interesting side effect that if one sets the inner and outer limits to the same amount, we'd achieve the same results as monitor matching. So, using my "never flick past 50%" example, if I set both limits to 25%, then I'd get the same result for all scopes as 25%MM. But if I set them to 0% and 50%, it will find, for each scope, the value which is the most balanced between 0% and 50% of my screen.

Like I say, I'm hesitant to introduce arbitrary variables here, but there are arguments, not limited to use-case, for biasing towards certain areas of the screen. Really though, using bounds of 0% and 100% should work out fine. It already naturally balances toward the centre as a result of the nature of the projection (consider how it stretches the image at the edges). In fact, the results I've seen so far suggest that it converges toward 0% .. while diverging from zoom ratio. That's pretty special. Anyway, now I'm diverging from the topic.

For now I'm just sticking with vertical 100%, but there's also horizontal screen space to worry about. And then there's balancing between them, too, because rectangular screens are definitely a thing. Not to worry, the formula that comes from doing this first part will be applicable to the rest of it.</spoiler>

-

You can't. It's not possible. But what we can do, is make it as 'perfect' as possible. We can do this by starting with a formula which encompasses all scenarios, and that's what we call monitor matching. The scenarios could theoretically reach off the edges of the monitor (consider 100%MM on a 4:3 screen, that's off the sides. It's also off the top and bottom edges of a 16:9 monitor), or be constrained within the limits of the monitor, whatever. But we have a formula which tells us what is 'perfect' for any *one given scenario*. This is where we get our monitor match percentage, eg 0% is correct for the scenario where the target is at 0% of the width of the monitor, 50% is correct for the target halfway to the edge, 100% is correct for the edge, etc. It also tells us how 'imperfect' it is for *all the other scenarios*. We find the balance point between right and wrong. Essentially this will end up with a formula which is no longer "too fast at low zoom" or "too slow at high zoom" or "good for tracking but not for flicks" or "good for flicks but not for tracking" as we have seen previously, but instead it will be "too fast in the middle and too slow at the edge", but the "too fast" and "too slow" will balance out perfectly, so it will be not too fast or too slow, given "all scenarios", and it will be balanced between tracking and flicking, etc. It's the 'Goldilocks' formula. Yeh I think it just got a name XD So, how can we make the ratio perfect for all scenarios? We can't. Ever. But we can define those scenarios and most importantly we can find the most balanced ratio for them.

-

Well the other methods work well in their intended functions. This one just does the job of matching sens between FOVs, in a different way. Think of it like this (I simplify here but you get the idea): No formula can ever be perfect all of the time. So we have to make some sacrifices, and try to gain whatever we can.

0% is always perfect at the centre of the screen. But it is always imperfect everywhere else.

56.25% is always perfect at the top and bottom of the screen. But it is always imperfect everywhere else.

This formula would be perfect in different places depending on the zoom level. But it is always imperfect everywhere else.

... But, if we add up all the imperfections of the others, and all the imperfections of this, then this one will be the least imperfect.

Honestly, the most important thing is that a player is accustomed to whatever settings he has. It really doesn't matter what those settings are; if you are used to using those settings, then you will be best with those settings.

-

Right, and that's why I'm not using monitor matching. I refer to the results of the formula as a monitor matching percentage because, as you said, that's what people are familiar with, but if you read what I'm talking about, I'm just scaling the sensitivity to the value which produces the least error (ie it is the most correct, mathematically) in the projected view, so that it's not some arbitrary value. Since we're talking about the view projected onto the monitor we can translate that to a monitor match, but that's not what it is really.

This same formula can be extended beyond the extents of the monitor or constrained to a portion of the monitor (and not necessarily the centre, either). Not that there would be any reason to do that, but the point is that it's not based on any pseudoscience or made up numbers and it does not ignore the flaws in any particular formula. Instead it accepts that all formulas are flawed (an unavoidable fact) and finds the optimal result. What I'm talking about does not care where you measure the FOV, screen aspect ratio, screen distance (well it does care about that but it makes no difference). It simply finds the least erroneous aka most correct sensitivity.

This is exactly the thing I am avoiding here. The fact is that no situation is ever going to be perfect. For example we all know that 0%/zoom ratio/focal length ratio/pick a name, gives perfect tracking. We all also know that perfect tracking is literally physically impossible. If we are to avoid pseudoscience, we must accept that there will always be an error, and then attempt to minimise it as best as possible. That's what this formula does.

-

For reference for the non-skwuruhl-level nerds among us, and maybe to help what I'm saying make more sense to skwuruhl because I don't speak mathanese: what I'm talking about here is using this: https://en.wikipedia.org/wiki/Mean_value_theorem This page has some nice pictures that help it make sense.

We can specify the centre and the edges of the screen (vertical being 1 and horizontal being 1.7777 or whatever, as determined by your aspect ratio) as a and b in that function, and get our f'(c). I just don't have a formula to get our value for c (and accordingly f(c)) from that.

Once we have that formula, what we get is some math that does this: "I only care about 'this' part of the screen. I want to convert between 'this FOV' and 'that FOV'. What is the sensitivity that will be the most correct, across the part of the screen I just said?" ... and it will spit out an answer.

Basically, we're taking the guesswork out of "Which monitor match percentage is best?". We already know that it's going to be close to 0%. It will just shift outward for high zoom scopes.** But we can quickly look at the graphs above and predict - it won't be much. 0% is darned close.

** Actually it shifts inward toward 0%, but the effect is that it is a number that moves away from the 0% line as zoom increases.
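For anyone who prefers symbols to pictures, this is the theorem from the linked page, plus its integral form. Reading "most correct across a part of the screen" as the integral form is only my interpretation of the post, not something it states outright; a and b stand for the inner and outer screen positions mentioned above.

```latex
% Mean value theorem: f continuous on [a,b], differentiable on (a,b)
\exists\, c \in (a,b) : \quad f'(c) = \frac{f(b) - f(a)}{b - a}

% Mean value theorem for integrals: f continuous on [a,b]
\exists\, c \in [a,b] : \quad f(c) = \frac{1}{b - a} \int_a^b f(x)\,\mathrm{d}x
```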

-

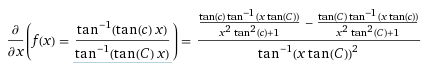

I call it 0% just because that's what most people call it. I call it zoom ratio when I address you because that's what you call it. Same same, different name! 56.25% aka 100% vertical could be "angle ratio" and explain its beneficial aspects much better. I've used the MM %ages above because I'm drawing comparisons between them all. Have a read of my post above, or actually you're a math nerd, you might just be able to construe it from the graphs.

It's easy to find certain scenarios where certain formulas might seem correct, but we know that none of them ever will be. It just isn't mathematically possible. Using that video as an example, it looks great. But we don't move when we ADS. If we went from a 120FOV hipfire to an 8x zoom in that example we'd have to move back some 400m before it seemed correct XD And then it would only be correct if we tracked the target perfectly (which is physically impossible), and once the target leaves the centre of the screen it gets to feel way too slow, and at that zoom ratio (>20) it does so very quickly. We've observed this experientially, and now I've shown mathematically why that feeling exists. And I've not even gotten into the perception of the size of and distance to the target resulting from the projection (discussed much earlier in this thread).

But what IS mathematically possible is to find the least incorrect among them all. Your assistance would be appreciated! If it helps any, these are the equations in a form that wolfram likes (I think you use that? I don't know).

Monitor match - C is first FOV, c is second FOV (not even sure how to place variables in there and most letters don't work, which is the only reason I used C and c), x is monitor height:

f(x) = (tan^(-1)(tan(c) x))/(tan^(-1)(tan(C) x))

d/(dx)(f(x)) = ((tan(c) tan^(-1)(x tan(C)))/(x^2 tan^2(c) + 1) - (tan(C) tan^(-1)(x tan(c)))/(x^2 tan^2(C) + 1))/tan^(-1)(x tan(C))^2

Just my luck, it won't integrate it for me (exceeded computation time) so I have to do it manually.
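A quick way to sanity-check the derivative above is to compare it against a finite difference. This is just a sketch: the function names and the sample angles are my own placeholders, and c and C are treated as plain angles in radians, exactly as they appear in the wolfram-style expressions.

```python
import math

def f(x, c, C):
    """Monitor match ratio from the post: atan(tan(c) x) / atan(tan(C) x)."""
    return math.atan(math.tan(c) * x) / math.atan(math.tan(C) * x)

def f_prime(x, c, C):
    """Closed-form derivative, transcribed term by term from the post."""
    tc, tC = math.tan(c), math.tan(C)
    numerator = (tc * math.atan(x * tC)) / (x**2 * tc**2 + 1) \
        - (tC * math.atan(x * tc)) / (x**2 * tC**2 + 1)
    return numerator / math.atan(x * tC) ** 2

def central_difference(x, c, C, h=1e-6):
    """Numerical slope of f at x, used only as a cross-check."""
    return (f(x + h, c, C) - f(x - h, c, C)) / (2 * h)

if __name__ == "__main__":
    c = math.radians(7.4)   # assumed sample value, not from the post
    C = math.radians(40.0)  # assumed sample value, not from the post
    for x in (0.25, 0.5, 1.0):
        print(x, f_prime(x, c, C), central_difference(x, c, C))
```

If the two columns agree to several decimal places, the symbolic derivative is at least consistent with f(x) as written.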

-

I need a break from the math so I'll explain the above in more depth.

As discussed previously ITT, there is no perfect way to convert sensitivity between FOVs, so the only way to have consistent sensitivity is to always use the same FOV. Since that's not possible for weapon zooms (every game zooms differently), setting the same hipfire FOV between games makes a lot of sense, since it's often the ONLY thing that can be perfectly matched. That being said, what is a useful FOV for one game may not be suitable for another, so as strongly as I would recommend setting the same hipfire FOV, I wouldn't judge anybody who chooses not to.

Also, and on a related note, there is a strong argument for using ~55FOV as your hipfire FOV, because your peripheral vision extends from around this angle, and it is our natural inclination to position ourselves from our monitors so as to have the monitor fill our active vision, which gives an actual FOV of around 55 degrees. That being said, while it may be optically superior to do this, it presents some limitation on our awareness in-game, not to mention that there are advantages in such matters as recoil control in using higher FOVs - because the higher mouse sensitivity requires smaller movements to correct a given deflection of our point of aim - so I certainly don't fault anyone for opting for higher FOVs.

Regarding the holiness of 0%MM, I will explain using the above linked geogebra demonstration; I recommend you open it up and play along, as it makes it easier to see the pros and cons of 0% when you can, well, see it.

First, consider that x=0 (the Y axis) is the centre of your screen. The edges of the screen are x=-1 and x=1. So, you can imagine a target somewhere on your screen as being somewhere between -1 and 1. There is a dashed white line labelled a(x), right at the top of the screen. That is the divider for 0%MM. Note that it crosses the Y axis at the same point as the pink line. The pink line represents monitor matching at any percentage.

So, let us consider targets at certain positions on screen. For a target at the centre of the screen, we think of it being at x=0. If we follow x=0 (the Y axis) upward until we hit the pink line, we can see that the perfect match for a target at that position is where the pink and dashed white lines cross, and that's 0%MM.

So let's keep this related to reality. Let's say we have a target and we turn to him in hipfire and we zoom in, aimed right on him at the centre of the screen because we are perfect players with perfect aim. Our FOV changes as we right-click, and 0%MM is applied, so our sensitivity is divided by the value at the dashed white line. Sweet. Except we don't control the target, and that sneaky sod strafed because he saw us too, and we do have a reaction time, so let's say we don't react to his movement until 100ms after we see it (because we are just THAT fast because we are perfect, remember?). Given that our target strafes at 5m/s, he has now moved 50cm to the right and we need to adjust our aim before we fire because we're not aimed at his head any more.

So, our target is now at, let's say 10%, which will be on the first grey grid line to the right. So we follow that line up and we cross the pink line, indicating the correct MM %age, before the 0% dashed line. What does this mean? Well, it means that our sensitivity, since we use 0%MM, is a little bit too low, because the 0% divider we have set up is greater than the 10% divider we need to hit the target. But you know what, it's pretty darned close. 6.46 vs 6.4. There ain't much in it. 0.06*zoom is our error here.

OK well let's say we aren't perfect, maybe we had a late night, let's say we were a little off-centre when we zoomed in on our target, and we took a little longer to react to his movement. Let's say he's at 20%, the second grey line. We follow the line up, same as before, and we're now at about 6.25. OK now our error is 0.2*zoom. That jumped up a lot from last time.

What about if it's more of a flick shot? We're hardscoping a doorway and oh snap, the enemy came through the other door. Have to snap to 30%, the third grey line. We follow it up and now we're at about 6. That's getting EVEN FURTHER from our 0%MM settings. Now our error is 0.46*zoom.

Notice how, the further the target gets from the centre, the more wrong it is? We're accelerating away from 0% being correct. This is where the purple line down the bottom becomes important. It shows us the slope of the pink MM line. As long as that's going away from 0, things are getting worse, faster. The place where that purple line peaks is the most correct/least wrong, considering the entirety of the game world.

So, as it's saved, that chart shows 80FOV hipfire and 14.8 for the zoom, which is my 4x scope. Let's not be too sensationalist here, let's grab that fova slider and slide it up to say 55, let's say we're using ironsights. Note now that the pink line stays quite close to the 0% dashed white line, for quite some distance out. The purple line is flatter. Things are less wrong at closer FOVs.

But OK what about an 8x zoom? Grab that fova slider again and this time take it down to say 4.8. Well, dang. That's not good. The purple line is spikey as heck, and you have to scroll up just to see the top of the pink line and wow, it's a spike. It just touches the white dashed line and then takes a quick trip into wrongville.

And what if we have a really wide hipfire FOV? Let's leave the fova slider at 4.8, and take the fovb slider up to 120 for tryhard hipfire. Well, that ain't good, is it? We can see clearly that the more distant our FOVs, the more wrong 0% becomes, faster.

Now we can just go for the least wrong thing, and that would be the place where the purple line peaks, which is insanely close to 100%MM (vertical; I don't know why the calculator uses horizontal. It's 56.25% in the calculator, assuming a 16:9 screen) most of the time. But that's the least wrong across the ENTIRE game world, including everything behind you. And let's face it, that should be a problem you deal with in other ways (awareness, turning when hipfire, etc). We're much more concerned with what's actually on screen, especially since that is what's affecting our perception of the game world and requires a change in sensitivity at all. If we're adjusting our sensitivity based on things behind us, it comes at the expense of accuracy to things in front of us, which would be of very questionable value.

So, what we need is a formula that works like the purple line, but only considers the on-screen game world - and returns the peak of that curve, to tell us what our sensitivity should be, to get the least amount of error aka the most accurate sensitivity. No, I don't have that formula YET (still taking volunteer help. Anyone? Anyone at all? feelsbadman), but we can make observations from what we have here that will give us some hints:

At lower zoom levels, where the zoom is closer to hipfire FOV, the value will be quite close to 0%.

As the zoom levels increase, and the difference between hip and zoom FOV increases, the value will depart further from 0%.

This comes as no surprise given our intuition and experience with previous testing. Flickshots under high zoom have always felt better at higher MM%. Tracking at low zoom (or by increasing distance from the target as demonstrated in the Wizard's recent YT video) has always felt great at 0%.

So is 0% the holy grail? No. There is no doubt that it has its imperfections, as all formulas always will. But in certain scenarios, it's darned close, and we can expect my formula to result in values close to the values of 0% for low zoom FOVs. Likewise, while 0% is perfect for a 2D world and fails in 3D, 100% vertical aka 56.25% horizontal @16:9 is perfect for angular displacement, but ignores the 2D projection we are looking at, and angular distance is a thing (see links earlier in the thread), and our eyes will lie to us about the required rotation to a target, so it isn't the holy grail either. But at higher zooms, and when snapping not tracking, it has its temptations.

The holy grail is somewhere in between - and it's different for every scope.
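The numbers read off the chart in this walkthrough can be roughly reproduced with a few lines of Python. A sketch only: `divider` is a made-up name, the FOVs are taken as full vertical angles (80 hipfire, 14.8 zoom), and I am assuming the three grey grid lines sit at x = 0.2, 0.4 and 0.6, because that spacing is what gives back approximately 6.4, 6.25 and 6. The post itself does not state the grid spacing.

```python
import math

def divider(x, hip_fov_deg, zoom_fov_deg):
    """Sensitivity divider that is exact for a target at screen position x.

    x is in half screen heights (0 = centre, 1 = top/bottom edge) and both
    FOVs are full vertical angles in degrees.
    """
    hip = math.tan(math.radians(hip_fov_deg) / 2)
    zoom = math.tan(math.radians(zoom_fov_deg) / 2)
    if x == 0:
        return hip / zoom  # limit as x -> 0: the zoom ratio, i.e. 0%MM
    return math.atan(x * hip) / math.atan(x * zoom)

if __name__ == "__main__":
    zero_mm = divider(0, 80, 14.8)
    # Grid positions are an assumption (see the note above the code).
    for x in (0.0, 0.2, 0.4, 0.6, 1.0):
        d = divider(x, 80, 14.8)
        print(f"x={x:.1f}  divider={d:.3f}  gap to 0%MM={zero_mm - d:.3f}")
```

At x = 1 the divider is simply 80 / 14.8, which is the "just divide the FOVs" case.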

-

Well, none of them yet.... The intention of this thread is to develop a new formula which results in the most correct sensitivity. I don't want the thread to veer off topic again, but... If I had to pick one right now: either 0% or 100% vertical (aka in the calculator as 56.25% horizontal at 16:9) monitor matching.

0%MM gives us the perfect match for the centre of the screen (and therefore perfect tracking if the target never leaves the exact centre of the screen) and if the 3D game were 2D that would be the end of it.

100% (aka 56.25%) gives us a division of the FOVs which results in the correct movement considered as angles, however the distortion on screen means that it is not always intuitive. 100% is also the most correct across the entirety of the game world, however we are usually most concerned with the part of the world which is projected onto our screen.

TL;DR

If you want good tracking go 0%MM

If you want good flicking go 56.25%MM

If you want some rough approximation of "most correct" go 28.125%MM

If you want actual "most correct" we don't actually know yet, and I would love help with the math
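To put numbers on the three TL;DR options, here is a small Python sketch of the usual monitor match calculation. The function name and the 80 / 14.8 example FOVs are my own choices for illustration; the calculator's horizontal percentage is converted to screen heights using the aspect ratio, which is why 56.25% at 16:9 comes out as 100% vertical.

```python
import math

def mm_divider(hip_vfov_deg, zoom_vfov_deg, horizontal_pct, aspect=16 / 9):
    """Sensitivity divider for a horizontal monitor match percentage.

    FOVs are vertical, in degrees. The horizontal percentage is converted to
    half screen heights, so 56.25% at 16:9 becomes 1.0 (100% vertical).
    """
    x = horizontal_pct / 100 * aspect
    hip = math.tan(math.radians(hip_vfov_deg) / 2)
    zoom = math.tan(math.radians(zoom_vfov_deg) / 2)
    if x == 0:
        return hip / zoom  # 0%MM: the zoom ratio
    return math.atan(x * hip) / math.atan(x * zoom)

if __name__ == "__main__":
    for pct in (0, 28.125, 56.25):
        d = mm_divider(80, 14.8, pct)
        print(f"{pct}%MM -> divide hipfire sensitivity by {d:.3f}")
```

The 28.125% value always lands between the other two, which is all the "rough approximation" option is relying on.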

-

Life is hard but I'm not dead yet. Just for proof: https://www.geogebra.org/m/hgbtnmqb

Pink line is monitor match

X axis is monitor height (so x=1 is full height of your monitor)

Purple line shows the slope of the pink line

Hidden stuff is just to confirm that calculations work out the same if taking into account the distance from the monitor

Have a play with the fova and fovb sliders. Values are currently at 14.8 (BF 4x scope) and 80 (my hipfire) but most insight is gained from very distant values. Try 120 hipfire and 4 for a high zoom. You can see how far it gets from 0%MM, and how quickly.

This is a fun one because it serves as proof of several previous assertions made ITT:

100% (vertical) MM is just division of the FOV angles

Correct sensitivity divider approaches zoom ratio as we approach centre of screen

Correct sensitivity divider approaches 1 (1 meaning same sensitivity for all zoom levels) as we approach 180 degrees from centre, regardless of the configured FOV

0%MM becomes increasingly incorrect as the target moves away from the centre of screen

We can quantify an average error in monitor match **

Minimal error for any target at any point including off screen is 100% (vertical) MM aka just divide the FOVs

Different zoom FOVs should be monitor matched to different % in order to minimise the error at any point on screen

** This will be the definite integral of f(x) = atan(tan(fova/2)x)/atan(tan(fovb/2)x) with limits 0 and 1. The inverse of that function will provide us with the MOST correct monitor match percentage for the given FOVs. Yes, I tried throwing it at wolfram and exceeded computation time. Damn. If some lovely person would like to find that formula, we've got our minimum error formula.... Otherwise I'll re-learn more math and let you know when I'm done
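Until someone finds the closed form, the "integrate from 0 to 1, then invert" idea can at least be tried numerically. What follows is only a sketch of the mechanics: the function names, the midpoint rule and the bisection search are my own choices, and whether the plain average of f is the right definition of "least error" is exactly the open question here.

```python
import math

def f(x, fova_deg, fovb_deg):
    """f(x) = atan(tan(fova/2) x) / atan(tan(fovb/2) x), as written above."""
    ta = math.tan(math.radians(fova_deg) / 2)
    tb = math.tan(math.radians(fovb_deg) / 2)
    if x == 0:
        return ta / tb  # limit as x -> 0
    return math.atan(ta * x) / math.atan(tb * x)

def mean_of_f(fova_deg, fovb_deg, lo=0.0, hi=1.0, steps=2000):
    """Average value of f on [lo, hi], approximated with the midpoint rule."""
    if hi == lo:
        return f(lo, fova_deg, fovb_deg)  # degenerate range: plain monitor match
    width = (hi - lo) / steps
    samples = (f(lo + (i + 0.5) * width, fova_deg, fovb_deg) for i in range(steps))
    return sum(samples) / steps

def position_of_mean(fova_deg, fovb_deg, lo=0.0, hi=1.0, iterations=60):
    """Bisect for the x in [lo, hi] where f(x) equals its own average."""
    target = mean_of_f(fova_deg, fovb_deg, lo, hi)
    rising = f(hi, fova_deg, fovb_deg) >= f(lo, fova_deg, fovb_deg)
    a, b = lo, hi
    for _ in range(iterations):
        mid = (a + b) / 2
        if (f(mid, fova_deg, fovb_deg) < target) == rising:
            a = mid  # the matching position lies further out
        else:
            b = mid  # the matching position lies further in
    return (a + b) / 2, target

if __name__ == "__main__":
    x, avg = position_of_mean(14.8, 80)
    print(f"average f = {avg:.5f}, reached at x = {x:.4f} ({x * 100:.1f}% vertical)")
```

The bisection relies on f being monotonic between the two limits, which holds for this ratio of arctangents when the two FOVs differ.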

-

BF1 vehicle calculations are broken

CaptaPraelium replied to CaptaPraelium's topic in Feedback, suggestions and bugs

Little bump. Trying to do some new calculations now but this is still broken.

-

BF1 vehicle calculations are broken

CaptaPraelium replied to CaptaPraelium's topic in Feedback, suggestions and bugs

It's from hipfire to tanks, and hipfire to cavalry. I'm sure it worked before because I did it before and it's OK (in my config file now) but I punched it in just while I was there doing something else and it gave me the error.

-

I'm getting an error that the calculated sensitivity is too high... but I'm sure it's not, I've done this same calculation before and it worked OK. It's telling me I need to go up from my usual 800 up to 6400 DPI XD

-

Hi guys. I have been very sick and unable to really work on this recently. I hope to be able to get back to it in a week or so. Might I ask that if you want to go offtopic, you could please create a new thread? There are a couple pages of 'muh feels' and 'how do I convert X to Y' in here and it's really quite far from the topic of this thread. Makes it hard for me to pick it back up when I recover. Thanks in advance

-

This is what I've been getting at. We choose to match based on some percentage of the monitor space... Uhm.... Why exactly? What's so special about the boundaries of the display? Not only are they determined by the monitor dimensions, but also change depending on the game context (eg, is my spaceship rolled sideways?) Matching to 100% horizontal is no different to matching some space above your monitor where you can't see. What random space? Well, that depends on which monitor you bought. I don't have much time for horizontal matching, at this point. Vertical matching at 100% does have a redeeming feature above other monitor match percentages, and that is that it matches at the point where the angle to the target is the same angle as the FOV, and that FOV is actually an input in calculating the image displayed, so it has some mathematical relevance. Setting the target in the geogebra demo, to the top edge of the screen, shows us that we get the same zoom ratio, as the direct division of the FOVs - and at this point I can't exclude the possibility that this simple solution is actually the correct formula.

-

There's a reason basically every operating system has it on. Push mouse faster, makes mouse go faster. It's very intuitive. Acceleration works entirely programmatically so theoretically speaking you could be accurate with it. If you can control not only the distance of your hand movement, but also the speed, and acceleration, it could work. Thing is, you want to remove barriers to accuracy. Have you ever compared PC shooter gameplay, to console gameplay of the same game, and been like wow, mouse aim makes this completely different? I mean we all know controllers are less accurate but, think of why they're less accurate - because not only do you have to control the distance of the stick throw, but the speed and acceleration. That extra effort is why they're so much less efficient, and it's the same extra effort required to use acceleration. If I had a trackpad though, where I could completely control the acceleration algorithm....Don't get me started. TL;DR Acceleration is like using a controller.

-

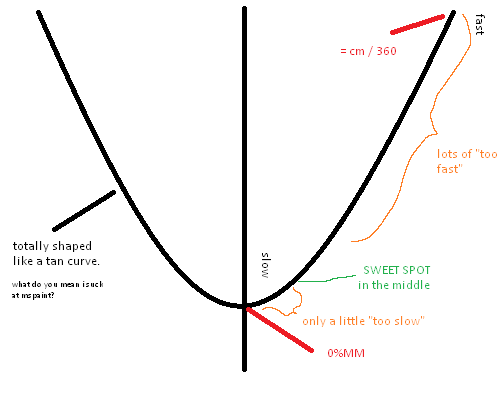

This is what I'm trying (badly, I know) to explain with the crappy picture above. You're actually both right. If everything we were doing were a 2D image, which in fact it is because it's on screen, 0%MM would be correct. Heck, that's exactly how 0%MM works: it treats the game world only as its 2D projection and tells us how much we zoomed it in on our flat screen. But, because of the distortion we experience by viewing it as a 3D scene (but not changing our focal point when we change FOV, ie, sitting in the same place all the time), the image becomes stretched, so the cursor feels like it is moving through the 3D space at a different speed. The nature of that 3D space is determined by the distortion, so the distortion is controlling the 'too fast/too slow' feeling we get. (And it's not necessarily just the distortion of our seating position - here, I am referring to the difference in distortion between two FOVs.)

There's good reason why gear ratio/1:1/100%VFOV/4:3 feel closer and seem to move the image at a speed that feels right. 0%MM is always exactly right for anything directly under the crosshair. But for anything away from it, 0% is too slow, and the further it is from the crosshair, the more 0% is too slow. As we monitor match further and further from the crosshair, we get higher and higher sensitivity. If we 'monitor match' at 180 degrees - even though that's not on the monitor - what we get is a sensitivity divider of 1 - as in, SAME sensitivity, same cm/360, for any FOV. Which is obviously too fast.

So, what we have here are two known sensitivities. At the centre of the crosshair, we have 0%MM. This is as slow as it gets. And at the very edge of the game world/our turning circle, we have =cm/360. This is as fast as it gets. And this makes sense. There's no reason we would ever want to go below or above these speeds respectively.

So let's graph out a line between those. In my crappy mspaint, it's shaped like a tan curve (inverted at the Y axis because it's more intuitive to think of it as 'left X' than 'negative X'). Somewhere along that line is a place where it's a little too fast, but not too close to the =cm/360 extent; and a little too slow, but not too close to the 0%MM extent. That's the sweet spot.... and those places where drimzi's muh feels and clever LGS script make it seem correct, they seem that way because they're close to that sweet spot.

The geogebra demo I did shows hints of all of this, but it's not always immediately apparent. We can demonstrate it though. I'm still working on being able to show my working and give an exact formula that gives exact sensitivities to use.

-

Yep. The reason 0% works like this is because it is at the extent of logical sensitivities - the lowest extent. Everything is too slow at the centre, and moves toward being too fast as it moves outward. Because of the way the distortion curves, the errors in sensitivity curve the same way. So most of the error is away from the centre. Note that the 'sweet spot' is closer to 0% than it is to the opposite extent. This is why, if you choose one extent over the other, 0% wins easily.

The trouble is that we don't continually re-assess our position. We make estimations and move, then re-assess it. Think of a 'flick shot'. You don't slowly move the crosshair over the target, you make one singular 'flick' of the wrist, and click a few buttons, all in one instant action like it's packaged up in a bow. If the system is that we constantly check the presence of the target under the crosshair, literally any sensitivity will do.

-

I don't know where you got this idea. Aim is critical, especially in tanks. You should use the same formula that you use for infantry. As for movement, you do that with the keyboard..... The movement speed is limited by the vehicle, and not affected by mouse sensitivity.

Experience will trump even a 'perfect' formula... But both will trump experience alone.

I did cover this earlier in the thread but it's long AF, I know. TL;DR is that our brain measures distance by angle between points, so when your FOV changes, the angles change, and it messes with your perception of distance. Distance and speed are linked, so it changes your perception of speed. If you sit far enough away, pixels will be indistinguishable, but the effect remains.

This thread is about doing things as scientifically soundly as possible. If we don't propose and test hypotheses, we're doing it wrong! Don't be afraid to speak your mind and make suggestions; if you're wrong it's just a step toward being right, and if you're right, you'll be helping a LOT.

I don't know if I'd call it a controversy, but it's definitely the 64,000 dollar question XD

-

Oh trust me, I'll be using a computer to solve them. I'm no sadist. But I need to MAKE them first

-

Updated version of the geogebra demo. https://www.geogebra.org/m/adAFrUq3 I need to re-make this from scratch with three, linked targets with positions relative to each of the three focal points. It's a lot of work, it's gonna take a while. Meantime, this version allows us to reposition the target to be off-screen, to demonstrate the way the angles scale beyond the visible parts of the projection. It's *far* from perfect but it gives some idea. Funny story. You know how people learn calculus in school and they're like "I'm never gonna need this after school this is stupid"? Well, not me, I was like, 'I need this for my career'. Decades later, different career, and I'm now having to relearn differentials just for this nerdery. The irony. lol.

-

This has been covered in other threads, I think we're pretty far off topic here sorry.

-

Theoretically yes. In practice of course this is never possible...well never practical to move your seat around, and that's why we're nerding out in here trying to find the 'next best thing'

-

//My monitor resolution
widthpx = 2560
heightpx = 1440

//27 inch diagonal, converted to centimeters because Australia. If you prefer inches, just don't do *2.54.
diagcm = 27*2.54

//Distance from monitor to eye, also centimeters in this case, measured with a piece of string...
//actually used my headphone cable and a borrowed Hannah Montana ruler XD Super high tech here
viewdistance = 36

//Calculating monitor height from this information so it's more exact than just measuring it. We need monitor height because FOV is usually measured vertically.
heightcm = (diagcm/sqrt(widthpx^2+heightpx^2))*heightpx

//Calculating the actual FOV of the eye to the monitor based on the above
actualfov = 2*arctan((heightcm/2)/viewdistance)

//Calculating the focal point (ie, the 'optically correct distance' as Valve says it) for a given VFOV (vfov is the in-game vertical FOV)
opticallycorrectdistance = (heightcm/2)/tan(vfov/2)
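The same calculations as a runnable Python sketch, for anyone who wants to plug in their own monitor. The function names are made up for this example, angles go in and out as degrees, and the 80 in the usage lines is just a sample in-game VFOV.

```python
import math

def monitor_height_cm(width_px, height_px, diagonal_in):
    """Physical screen height in cm from resolution and diagonal size."""
    diag_cm = diagonal_in * 2.54
    return diag_cm / math.hypot(width_px, height_px) * height_px

def actual_vfov_deg(height_cm, view_distance_cm):
    """Vertical angle the monitor really fills at the eye, in degrees."""
    return math.degrees(2 * math.atan((height_cm / 2) / view_distance_cm))

def optically_correct_distance_cm(height_cm, vfov_deg):
    """Eye distance at which the monitor fills exactly vfov_deg vertically."""
    return (height_cm / 2) / math.tan(math.radians(vfov_deg) / 2)

if __name__ == "__main__":
    height = monitor_height_cm(2560, 1440, 27)
    print(f"monitor height: {height:.2f} cm")
    print(f"actual FOV at 36 cm: {actual_vfov_deg(height, 36):.2f} degrees")
    # 80 is only a sample in-game vertical FOV
    print(f"optically correct distance for 80 VFOV: "
          f"{optically_correct_distance_cm(height, 80):.2f} cm")
```

Feeding the actual FOV back into the distance function returns the 36 cm viewing distance, which is a handy check that the two formulas are inverses of each other.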

-

You're not stupid. It's confusing AF. Short answer is no. Your eye FOV would have to change to match the current FOV (read: you would have to move your seat back and forth when you right-click and un-right-click)

-

Yes, you're quite right - the distortion is unavoidable. We can never fully avoid the effects of it, and so I am attempting to minimise those effects. The issue with monitor matching, is that it does not function in the same manner, when considering the distortion on both the horizontal, and the vertical planes. As skwuruhl has mentioned in the past, the monitor match percentages which we have chosen, be they 100% vertical or horizontal or diagonal or whatever, are somewhat arbitrary. What I am attempting to find, is a non-arbitrary position at which to match sensitivities, by matching at the position where the errors in sensitivity (too slow/too fast) are minimal across all angles of rotation, regardless of whether that angle falls on-screen at the given FOV.