Which input method gives the most accurate mouse input in CS:GO: raw mouse input on, raw mouse input off, or the rinput program? Find out here!

Rinput is a third-party program that injects itself into the game to remove mouse acceleration.

Test system

CPU: Intel Core i7-3770K

Memory: 16 GB

GPU: GeForce GTX Titan | Driver: 355.82

OS: Windows 10 Professional x64

Game: Counter-Strike: Global Offensive (Steam version), Exe version 1.34.9.9 (csgo)

Map: Dust II

Test method

This test works by analyzing the number of packets lost or gained between the mouse driver and the game, using the scripting feature of Logitech Gaming Software to send the packets. Each test sends 100k packets to the game.
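The article does not say exactly how the in-game movement was read back, so the following is only a minimal Python sketch of the arithmetic, assuming the script sends a known number of equal-size packets and the total movement the game registered is known. The function name `packet_discrepancy` is hypothetical.

```python
def packet_discrepancy(packets_sent: int, packet_size: int, counts_received: int) -> tuple[float, float]:
    """Return (packets gained or lost, discrepancy in percent).

    Assumes every packet carries the same number of counts, so missing
    (or extra) movement can be converted back into whole packets.
    Negative values mean packets were lost; positive values mean some were doubled.
    """
    expected_counts = packets_sent * packet_size
    packet_diff = (counts_received - expected_counts) / packet_size
    percent = 100.0 * packet_diff / packets_sent
    return packet_diff, percent


# Hypothetical run: 100k packets of 10 counts, game registered 990,000 counts.
print(packet_discrepancy(100_000, 10, 990_000))  # (-1000.0, -1.0)
```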

Packet discrepancy is not the same as acceleration. Discrepancies are whole reports sent from the mouse that never reach the game (or, in rare cases, get doubled), whereas acceleration means the reports do reach the game, but movement is added to or subtracted from them.
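To make the distinction concrete, here is a toy Python model (an illustration only, not how rinput or the game actually process input): packet loss drops whole reports, while acceleration keeps every report but changes its size. The speed-dependent gain curve is purely hypothetical.

```python
from typing import Callable


def apply_packet_loss(reports: list[int], dropped: set[int]) -> list[int]:
    """Packet loss: reports at the given indices never reach the game;
    the surviving reports arrive unchanged."""
    return [r for i, r in enumerate(reports) if i not in dropped]


def apply_acceleration(reports: list[int], gain: Callable[[int], float]) -> list[int]:
    """Acceleration: every report arrives, but its movement is scaled by a
    gain that depends on the report size (i.e. on mouse speed)."""
    return [round(r * gain(r)) for r in reports]


def hypothetical_curve(counts: int) -> float:
    """Made-up acceleration curve: bigger (faster) reports get more gain."""
    return 1 + 0.01 * counts


reports = [10] * 5  # five identical 10-count reports

lost = apply_packet_loss(reports, {2})
accelerated = apply_acceleration(reports, hypothetical_curve)
print(len(lost), sum(lost))                # fewer reports, less distance
print(len(accelerated), sum(accelerated))  # same number of reports, more distance
```

Both effects change the total distance, but only packet loss changes how many reports arrive, which is why the two are measured separately.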

What's not tested

Since this analysis starts at the mouse driver, it does not account for anything that happens between the mouse's sensor and the driver, such as USB latency and sensor inaccuracy.

CS:GO's built-in raw mouse input reportedly suffers from buffering tied to the frame rate, but I have neither tested nor noticed this. Other sources suggest it amounts to 1-2 ms of lag, which is insignificant compared to the findings here.

The tests are also done offline, so most variables caused by online play are removed. If anything, these results represent a best-case scenario.

Testing procedure

At first I planned to send 1000 packets to the game and count the discrepancy. The results varied a lot, so I needed a larger sample size.

I then did a lot of testing with 10k packets, repeating each test 10 times. The results were much more consistent, but very time-consuming to collect.

So I ran each test once with 100k packets and compared the results to the average of the ten 10k-packet runs. As they were practically identical, I used this method for the final analysis.
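Why the larger sample helped can be sketched with a simple binomial model. This assumes each packet is lost independently with the same probability, which the article does not claim and which real-world fluctuation may violate; the 1% loss rate is a made-up example value. Under that model the standard error of the measured loss rate shrinks with the square root of the packet count, and ten runs of 10k packets reach the same precision as one run of 100k packets, which is consistent with the two methods giving nearly identical results.

```python
import math


def loss_rate_stderr(p: float, n: int) -> float:
    """Standard error of a measured loss rate when each of n packets is lost
    independently with probability p (binomial model, an assumption)."""
    return math.sqrt(p * (1.0 - p) / n)


p = 0.01  # hypothetical 1% loss rate, for illustration only
for n in (1_000, 10_000, 100_000):
    se = loss_rate_stderr(p, n)
    print(f"{n:>7} packets: {p:.1%} +/- {se:.3%} (one standard error)")

# Averaging ten independent 10k runs: the standard error of the mean is
# se(10k) / sqrt(10), the same as a single 100k run under this model.
ten_runs = loss_rate_stderr(p, 10_000) / math.sqrt(10)
single_run = loss_rate_stderr(p, 100_000)
print(math.isclose(ten_runs, single_run))
```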

Test layout

There are four input methods tested here:

- m_rawinput 1: CS:GO's built-in raw mouse input
- m_rawinput 0: with raw mouse input turned off, the game gets the mouse data from Windows rather than directly from the mouse driver
- rinput.exe 1.2: a program that injects itself between the mouse driver and the game; this is the non-sequential version
- rinput.exe 1.31: the sequential version of rinput

Each input method is tested with packet sizes of 1, 10 and 100. The packet size is the number of counts the cursor/crosshair should move per packet.

In addition, each of these combinations is tested with a delay of 1, 2 and 4 ms between packets, corresponding to polling rates of 1000 Hz, 500 Hz and 250 Hz.

Finally, all these tests were done both with and without vertical sync (vsync). All vsync tests used triple-buffered vsync at 120 Hz, but I have tested different refresh rates, and 60, 120 or 144 Hz makes little difference.
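The full test matrix can be enumerated as below. This Python sketch only counts configurations and converts delays to polling rates; it does not drive the mouse (the actual tests used Logitech Gaming Software scripts). The minimum duration ignores any scripting or timer overhead, so real runs would likely take longer.

```python
from itertools import product

METHODS = ["m_rawinput 1", "m_rawinput 0", "rinput.exe 1.2", "rinput.exe 1.31"]
PACKET_SIZES = [1, 10, 100]  # counts moved per packet
DELAYS_MS = [1, 2, 4]        # pause between packets
VSYNC = [False, True]        # vsync off / on
PACKETS_PER_TEST = 100_000


def polling_rate_hz(delay_ms: float) -> float:
    """One packet every delay_ms milliseconds -> packets per second."""
    return 1000 / delay_ms


def min_duration_s(delay_ms: float) -> float:
    """Lower bound on run time: ignores script and timer overhead."""
    return PACKETS_PER_TEST * delay_ms / 1000


tests = list(product(METHODS, PACKET_SIZES, DELAYS_MS, VSYNC))
print(len(tests), "configurations,", len(tests) * PACKETS_PER_TEST, "packets in total")
for delay in DELAYS_MS:
    print(f"{delay} ms -> {polling_rate_hz(delay):.0f} Hz, at least {min_duration_s(delay):.0f} s per test")
```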

Results

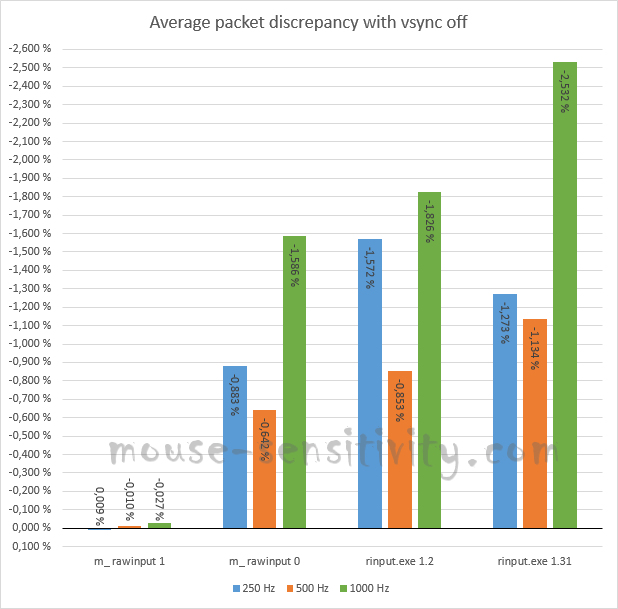

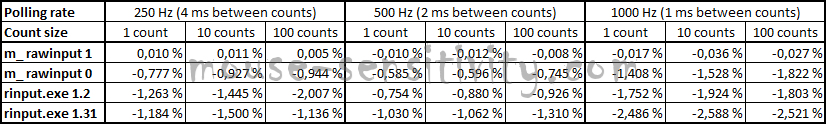

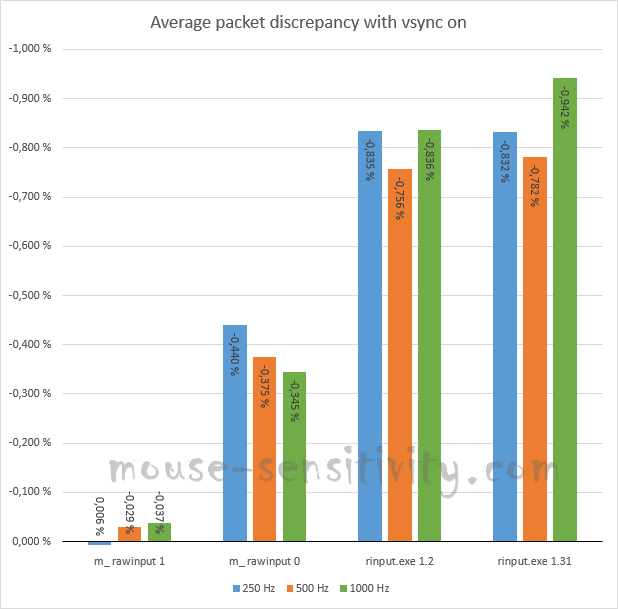

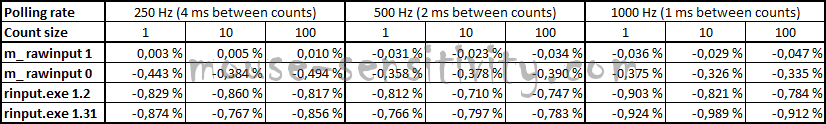

These graphs show the average discrepancy for each polling rate, averaged over the 1, 10 and 100 packet-size tests. The raw data is shown below the graphs.
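The per-polling-rate averages can be reproduced with a plain mean over packet sizes. A minimal Python sketch follows; the function name is hypothetical and the input values in the usage lines are placeholders, not the measured results.

```python
from statistics import mean


def average_by_polling_rate(results: dict[tuple[int, int], float]) -> dict[int, float]:
    """Collapse (polling_rate_hz, packet_size) -> discrepancy % into
    polling_rate_hz -> mean discrepancy % across packet sizes."""
    grouped: dict[int, list[float]] = {}
    for (rate_hz, _packet_size), discrepancy in results.items():
        grouped.setdefault(rate_hz, []).append(discrepancy)
    return {rate_hz: mean(values) for rate_hz, values in grouped.items()}


# Placeholder numbers for illustration only, not measured values.
example = {(1000, 1): -0.5, (1000, 10): -1.0, (1000, 100): -1.5}
print(average_by_polling_rate(example))
```

A plain mean gives each packet size equal weight, even though a 100-count test moves 100 times as far as a 1-count test.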

Because these are averages, in practice you might experience anything from close to no packet loss to more than twice the average. For methods other than "m_rawinput 1", there is a lot of random fluctuation.

Vsync OFF:

Vsync ON:

Video

This video visualizes the discrepancy:

Conclusion

"m_rawinput 1" provides by far the most accurate and consistent interpretation of the signals from the mouse driver. All tests indicate that this feature does exactly what it is supposed to do: get the raw mouse data with nothing interfering in between.

"m_rawinput 0" is better than rinput.exe in all tests; in some cases rinput.exe shows three times the loss of "m_rawinput 0".

Rinput 1.2 seems to yield a slightly better result than 1.31, especially at 1000 Hz.

Vsync has a huge impact on everything except "m_rawinput 1".

It's important to note that 1% packet loss does not necessarily mean that you move 1% shorter. When you play, the packets sent from your mouse vary greatly in size, ranging from 1 to over 1000 counts depending on your settings. Losing a 1-count packet makes little difference, but losing a 1000-count packet has a huge impact on how far you move.
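The difference between packet loss and distance loss is easy to see with a hypothetical stream of reports. In both cases below exactly 1% of the packets are lost, but the share of distance lost differs by a factor of 1000. The report sizes are made up for illustration.

```python
def loss_fractions(reports: list[int], dropped: set[int]) -> tuple[float, float]:
    """Return (share of reports lost, share of total distance lost)."""
    packet_loss = len(dropped) / len(reports)
    distance_loss = sum(reports[i] for i in dropped) / sum(reports)
    return packet_loss, distance_loss


# Hypothetical movement: 99 one-count reports and a single 1000-count report.
reports = [1] * 99 + [1000]

small = loss_fractions(reports, {0})   # lose one of the 1-count reports
large = loss_fractions(reports, {99})  # lose the 1000-count report
print(f"small: {small[0]:.0%} of packets, {small[1]:.2%} of distance")
print(f"large: {large[0]:.0%} of packets, {large[1]:.2%} of distance")
```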

If you want accuracy, there is no doubt: use "m_rawinput 1". Around 1-2 percent packet loss is significant, especially since the actual loss fluctuates, which makes your movement inconsistent.

If you want some other tests done, please let me know!
